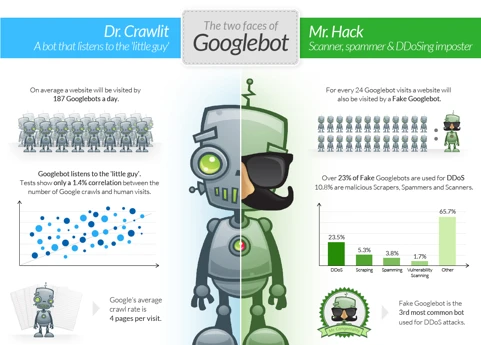

What is Googlebot?

Googlebot is the web crawler Google uses to discover pages for its search index. (In practice, the name covers two crawlers, Googlebot Smartphone and Googlebot Desktop.) It follows links from one page to another and fetches the pages it finds, so that Google’s indexing systems can process their content and make them eligible to appear in search results. Googlebot does not rank pages itself; Google’s ranking systems do that later. But a page that Googlebot cannot crawl gives Google no content to index, so crawlability underpins a website’s visibility in search engine results pages (SERPs). Understanding how Googlebot works is therefore essential for SEO and for any online marketing that depends on organic search. By following Google’s guidelines and best practices, you can make sure Googlebot crawls and indexes your website effectively, helping you attract targeted organic traffic. To learn more about how to optimize your website for Googlebot, check out our guide on How to Use Googlebot for SEO and Online Marketing.

Why is Googlebot Important for SEO?

Googlebot matters for SEO because crawling is the first step toward ranking. Googlebot fetches pages and follows the links between them; Google’s systems then analyze the content, structure, and relevance of what was fetched to judge each page’s quality and decide where it should appear in search results. A page that Googlebot never discovers or cannot fetch has no content in Google’s index and cannot compete for rankings. For website owners and SEO professionals, making a site easy to crawl and index is therefore a prerequisite for high rankings and organic traffic. By following SEO best practices and keeping your website Googlebot-friendly, you improve your chances of appearing in relevant search results and attracting valuable organic traffic.

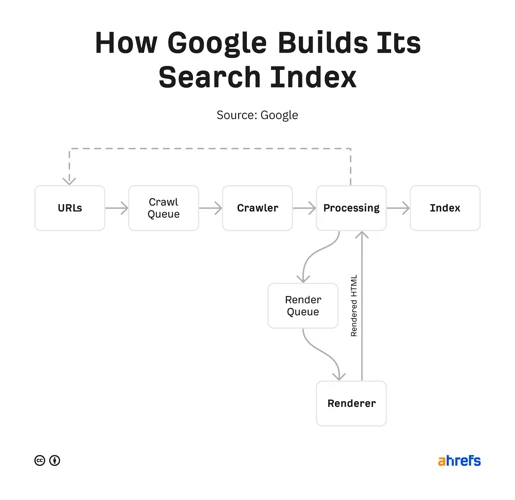

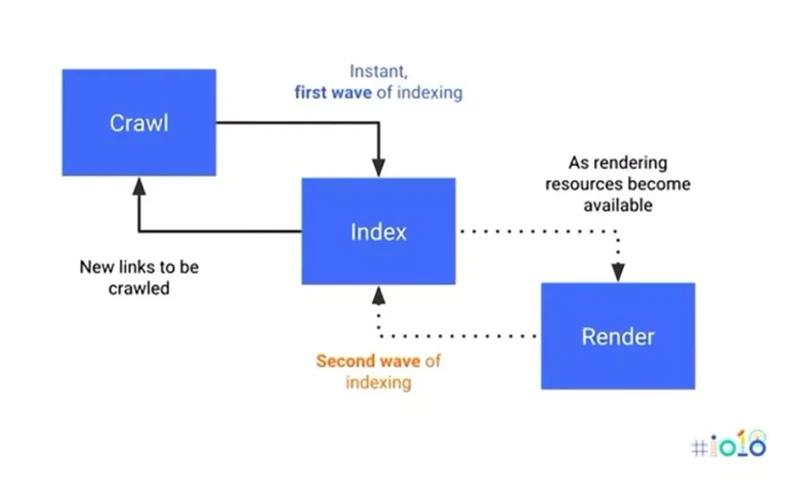

How Does Googlebot Work?

Googlebot’s work feeds a two-stage process: crawling and indexing. Googlebot starts from a list of URLs it already knows about, gathered from earlier crawls, from links on other pages, and from sitemaps submitted by site owners. It fetches each page, extracts the links the page contains, and adds newly discovered URLs to its queue of pages to crawl. The fetched content is passed to Google’s indexing systems, which analyze its text, images, and links and store the results in the index that Google uses to generate search results. Not all pages are crawled at the same frequency: Googlebot prioritizes URLs based on factors such as a page’s popularity, how often it changes, and its importance. To help Googlebot crawl your website effectively, give it a well-structured site with clear navigation and crawlable links (ordinary HTML anchor links with an href attribute). Regularly updating your content and submitting an XML sitemap can also help Googlebot discover your pages more efficiently.
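
The crawl-and-follow loop described above can be sketched as a toy breadth-first crawler in Python. This is an illustration under simplifying assumptions, not how Googlebot is built: real crawlers prioritize URLs, honor robots.txt, render JavaScript, and rate-limit requests per host. The `fetch` argument is a hypothetical stand-in for an HTTP client, so the example runs against an in-memory dictionary of made-up pages.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags: the links a crawler can follow."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl of a single host.

    fetch(url) must return the page's HTML, or None if it can't be retrieved.
    Returns the URLs that were successfully crawled, in crawl order.
    """
    host = urlparse(start_url).netloc
    seen = {start_url}          # every URL ever queued, so nothing is queued twice
    queue = deque([start_url])  # the crawl frontier
    crawled = []
    while queue and len(crawled) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:        # e.g. a 404 or a server error
            continue
        crawled.append(url)
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links and drop #fragments (same document).
            absolute, _fragment = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return crawled


# Hypothetical in-memory site standing in for real HTTP responses.
SAMPLE_SITE = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog/">Blog</a>'
                            ' <a href="/missing">Old link</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog/": '<a href="post-1">Post</a> <a href="https://other.example/">Elsewhere</a>',
    "https://example.com/blog/post-1": '<a href="/about#team">Team</a>',
}

print(crawl("https://example.com/", SAMPLE_SITE.get))
```

In this sample, /missing is skipped because the fetch returns nothing, the external link is ignored, and /about#team is recognized as a page that was already queued.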

How to Use Googlebot for SEO

To get the most out of Googlebot for SEO, there are several key steps you can take to improve your website’s visibility in search results. First, optimize your robots.txt file. This file tells Googlebot which URLs it may crawl and which to skip, so it doesn’t spend its time on pages that don’t matter. Second, submit an XML sitemap to Google Search Console to help Googlebot discover your pages. Third, avoid blocking important pages, such as those with valuable content or high-converting landing pages, so they can be included in search results. Fourth, monitor crawl errors regularly so you can find and fix issues that stop Googlebot from crawling your site. Finally, test and optimize page speed: a fast, responsive server lets Googlebot crawl more of your pages, and page experience is also considered in ranking. The sections below walk through each step.

1. Optimize Your Robots.txt File

One of the first steps in making your site work well with Googlebot is optimizing your robots.txt file. This plain text file, served from the root of your site (for example, https://www.example.com/robots.txt), tells search engine crawlers which pages or sections of your website they may or may not crawl. Configured properly, it keeps Googlebot focused on your most important pages. Note that robots.txt controls crawling, not indexing: a disallowed URL can still be indexed, without its content, if other pages link to it. To keep a page out of search results, use a noindex directive instead.

To optimize your robots.txt file, follow these steps:

1. Identify the pages you want Googlebot to crawl: Determine which pages on your website are the most valuable and relevant for search engine indexing. These can include your homepage, product pages, blog posts, and other important landing pages.

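2. Disallow low-value sections: Use the “Disallow” directive in your robots.txt file to stop Googlebot from crawling pages or directories that add no search value, such as internal search results, cart and checkout pages, or admin areas. This helps Googlebot spend its crawl time on the pages you care about. Two cautions apply. First, robots.txt is publicly readable and is not a security measure, so protect sensitive data with authentication rather than listing it in robots.txt. Second, for duplicate content, a canonical link (rel=canonical) is usually a better fit than a Disallow rule, because Google cannot see the canonical signal on a page it is not allowed to crawl.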

3. Allow access to important files and resources: Ensure that Googlebot has access to important files, such as your CSS and JavaScript files, as well as any images or videos that are necessary for rendering your web pages correctly. This will help Googlebot understand and index your content more effectively.

4. Regularly update your robots.txt file: As your website evolves, you may add or remove pages, change your site structure, or update your content. It’s important to regularly review and update your robots.txt file to reflect these changes, ensuring that Googlebot can crawl and index your site accurately.
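
To sanity-check rules like these before deploying them, you can parse a draft robots.txt with Python’s standard `urllib.robotparser` module (Python 3.8+ for `site_maps()`). The file below is a hypothetical example for www.example.com. One caveat: `urllib.robotparser` applies the first matching rule, while Google applies the most specific (longest) matching rule, so results can differ when Allow and Disallow rules overlap; the rules here deliberately don’t overlap. The robots.txt report in Google Search Console remains the authoritative view of how Google reads your live file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com: block low-value areas,
# leave CSS/JS under /assets/ crawlable, and advertise the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Disallow: /search/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in (
    "https://www.example.com/products/blue-widget",
    "https://www.example.com/assets/site.css",   # rendering resources must stay crawlable
    "https://www.example.com/cart/checkout",
):
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{verdict:8} {url}")

print("Sitemaps:", parser.site_maps())
```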

By optimizing your robots.txt file, you guide Googlebot toward the most valuable pages of your website, improving your chances of ranking higher in search engine results.

2. Submit an XML Sitemap

Submitting an XML sitemap is a key step in optimizing your website for Googlebot. An XML sitemap is a file that lists the URLs on your site that you want search engines to index, optionally with details such as when each page was last modified. Submitting it through Google Search Console tells Google where to find your content, which helps Googlebot discover pages that are new or poorly linked from the rest of your site. You can also point crawlers to it from your robots.txt file with a Sitemap: line. Most website platforms can generate a sitemap for you, either natively or through a plugin. A sitemap does not guarantee that every listed URL will be crawled or indexed, but it makes discovery faster and more reliable, particularly for large or frequently updated sites.
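
If your platform doesn’t generate a sitemap, a small script can. The sketch below assumes you already have a list of canonical URLs with last-modified dates (the URLs are placeholders) and writes them in the sitemaps.org XML format using only Python’s standard library. ElementTree escapes characters such as `&` automatically, which the sitemap protocol requires.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict[str, date]) -> str:
    """Return sitemap XML for a mapping of {absolute URL: last-modified date}."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages.items():
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc                      # & is escaped as &amp;
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()  # W3C date, e.g. 2024-01-15
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap({
    "https://www.example.com/": date(2024, 1, 15),
    "https://www.example.com/blog/googlebot-guide": date(2024, 2, 1),
}))
```

Save the output as, for example, sitemap.xml at your site root, then submit that URL in Search Console.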

3. Avoid Blocking Important Pages

Blocking important pages can stop Googlebot from crawling them, which hurts your website’s visibility in search results. The most common way this happens is through the robots.txt file, which tells crawlers which pages or directories to skip. Blocking some pages, such as internal search results or admin areas, is useful, but it is easy to block important pages by accident, for example with an overly broad rule or a site-wide “Disallow: /” left over from a staging site. To avoid this, review your robots.txt file whenever your site structure changes, and use the URL Inspection tool in Google Search Console to check whether Googlebot can crawl a specific page and how it sees it. Also remember that a page blocked by robots.txt cannot show Googlebot a noindex tag, so don’t combine the two on a page you want removed from search results.
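
Accidental blocks often come from the fact that Disallow values are path prefixes. The hypothetical audit below checks a list of must-rank URLs against a robots.txt file using Python’s `urllib.robotparser`; the rule `/search` was meant for internal search results but also matches a blog post. The check covers robots.txt only, not noindex tags or server errors, and Python’s parser doesn’t implement Google’s longest-match precedence, so treat it as a quick pre-deployment check rather than a substitute for the URL Inspection tool.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs in `urls` that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical example: Disallow values match by prefix, so "/search"
# blocks "/search-engine-basics" as well as the search results pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
"""

IMPORTANT_URLS = [
    "https://www.example.com/",
    "https://www.example.com/products/blue-widget",
    "https://www.example.com/search-engine-basics",
]

print(blocked_urls(ROBOTS_TXT, IMPORTANT_URLS))
```

Changing the rule to `Disallow: /search/` (assuming results live under that directory) would leave the blog post crawlable.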

4. Monitor Crawl Errors

Monitoring crawl errors is a crucial step in optimizing your website for Googlebot. Crawl errors occur when Googlebot encounters issues while crawling and indexing your web pages. These errors can negatively impact your website’s visibility in search results, as they prevent Googlebot from properly accessing and understanding your content. By regularly monitoring crawl errors, you can identify and fix any issues that may be hindering Googlebot’s ability to crawl your site effectively.

To monitor crawl errors, use Google Search Console. Its Page indexing report (formerly the Index Coverage report) lists the pages Google could not index and the reason for each, and the Crawl stats report shows how Googlebot’s requests to your site are going, including a breakdown of responses by status code. Common crawl errors include 404 (page not found) errors, server (5xx) errors, and redirect errors such as redirect loops.
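
Search Console is the primary source for crawl errors, but your server access logs show Googlebot’s requests as they happen. The sketch below assumes logs in the Combined Log Format that many Apache and nginx setups use, and counts Googlebot requests that received a 4xx or 5xx response. It matches Googlebot by user-agent string only, which can be spoofed, so verify the requesting IPs if accuracy matters.

```python
import re
from collections import Counter

# Combined Log Format: ip ident user [time] "request" status bytes "referer" "agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_errors(lines):
    """Count (path, status) pairs for Googlebot requests that got a 4xx/5xx."""
    errors = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # not in the expected format
        # User-agent strings can be faked; this only filters, it doesn't verify.
        if "googlebot" not in match["agent"].lower():
            continue
        status = int(match["status"])
        if status >= 400:
            errors[(match["path"], status)] += 1
    return errors
```

For example, `googlebot_errors(open("access.log"))` would tally errors from a log file, where access.log is a placeholder path; `.most_common()` on the result lists the worst offenders first.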

Once you have identified crawl errors, it is important to take action to resolve them. This may involve fixing broken links, updating server configurations, or implementing proper redirects. By addressing crawl errors promptly, you can ensure that Googlebot can crawl and index your website without any obstacles.

Regularly monitoring crawl errors is an essential part of maintaining a healthy, well-optimized website, because it lets you catch issues that may be hurting your search engine rankings and overall online visibility. For more on working with Google Search Console, check out our guide on How to Add Keywords in Google Search Console.

5. Test and Optimize Page Speed

Page speed is a critical factor in both SEO and online marketing. Users expect websites to load quickly, and search engines like Google take page speed into account when ranking websites. To ensure your website loads as fast as possible, you need to test and optimize its page speed. There are several tools available, such as Google PageSpeed Insights, that can analyze your website’s performance and provide recommendations for improvement. Some common strategies for optimizing page speed include:

– Compressing and minifying CSS, JavaScript, and HTML files to reduce their file size.

– Optimizing images by resizing them and using compression techniques.

– Enabling browser caching to store certain elements of your website locally on users’ devices.

– Using a content delivery network (CDN) to distribute your website’s content across multiple servers globally.

– Prioritizing above-the-fold content to ensure that the most important parts of your website load first.

By implementing these optimizations, you can significantly improve your website’s page speed, which enhances the user experience and can help your search engine rankings.
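
PageSpeed Insights also offers an API, which makes it possible to track scores over time. The sketch below builds a request to the v5 `runPagespeed` endpoint and reads the Lighthouse performance score from the JSON response; it reflects the API as we understand it, so confirm parameter and field names against Google’s current API reference. Calling `fetch_report` needs network access, and an API key is recommended for regular automated use.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url, strategy="mobile", api_key=None):
    """Build a PageSpeed Insights API request URL for one page."""
    params = {"url": page_url, "strategy": strategy}  # strategy: "mobile" or "desktop"
    if api_key:
        params["key"] = api_key
    return f"{PSI_ENDPOINT}?{urlencode(params)}"


def fetch_report(page_url, strategy="mobile", api_key=None, timeout=120):
    """Call the API (network access required) and return the parsed JSON report."""
    with urlopen(psi_request_url(page_url, strategy, api_key), timeout=timeout) as response:
        return json.load(response)


def performance_score(report):
    """Lighthouse performance score on a 0-100 scale, or None if it wasn't scored."""
    score = report["lighthouseResult"]["categories"]["performance"]["score"]
    return None if score is None else round(score * 100)
```

For example, `performance_score(fetch_report("https://www.example.com/"))` would return the mobile performance score for that (placeholder) page.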

How to Use Googlebot for Online Marketing

Googlebot also matters for online marketing, because any strategy that relies on organic search depends on Google being able to crawl and understand your pages. Here are some key steps to make the most of it:

- Understand User Intent: Google’s ranking systems favor relevant, high-quality content that meets the searcher’s intent. By understanding what your target audience is searching for and creating content that matches their needs, you can increase your chances of appearing in search results.

- Optimize for Featured Snippets: Featured snippets are the information boxes that appear at the top of search results. By optimizing your content to provide concise and informative answers to commonly asked questions, you can increase your chances of being featured in these snippets, driving more visibility and traffic to your website.

- Improve Mobile Friendliness: Mobile devices account for a significant portion of internet usage, and Google uses mobile-first indexing, so Googlebot primarily crawls the mobile version of your pages. Optimizing your website for mobile devices can improve your search rankings and provide a better user experience.

- Leverage Structured Data: Structured data helps Google understand the content and context of your web pages. By implementing structured data markup, you give Google additional information that can make your pages eligible for rich results, such as review stars or product prices.

- Monitor Indexing Status: Regularly monitoring your website’s indexing status is crucial to ensure that Googlebot is effectively crawling and indexing your web pages. By using tools like Google Search Console, you can identify any indexing errors or issues and take appropriate actions to resolve them.

By following these steps and staying up to date with Google’s guidelines, you can make Googlebot work for your online marketing efforts and drive targeted organic traffic to your website.

1. Understand User Intent

Understanding user intent is a crucial part of online marketing. To reach your target audience with valuable content, you need to know what people actually want when they type a particular query into a search engine: an answer, a product to buy, a comparison, or a specific website. Google’s ranking systems try to match results to that intent, so content that serves it well has a better chance of appearing prominently in search results; Googlebot’s part is to fetch your pages so they can be considered at all. To better grasp user intent, look at the keywords and phrases people search for and the context behind their queries. Keyword research and search trend analysis provide useful insights, and analyzing visitor behavior with tools like Google Analytics shows what users are looking for once they reach your site. By aligning your content with user intent, you can increase your website’s visibility and improve your online marketing results. For more on using Google Trends for research, check out our guide on How to Use Google Trends for Product Research.
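
A simple way to start sorting a keyword list by intent is to look for modifier words. The sketch below is a rough heuristic, not how Google interprets queries: the intent buckets are common SEO shorthand, and the modifier lists and the brand term “acme” are hypothetical examples you would tune to your own keyword research.

```python
# Hypothetical modifier lists; tune them from your own keyword research.
INTENT_MODIFIERS = {
    "transactional": {"buy", "price", "cheap", "discount", "coupon", "order"},
    "commercial": {"best", "review", "reviews", "vs", "compare", "top"},
    "informational": {"how", "what", "why", "guide", "tutorial", "tips"},
}


def classify_intent(query, brand_terms=()):
    """Label a search query with a rough intent bucket based on its words."""
    words = set(query.lower().split())
    if words & {term.lower() for term in brand_terms}:
        return "navigational"  # the searcher wants a specific site or brand
    for intent, modifiers in INTENT_MODIFIERS.items():  # checked in this order
        if words & modifiers:
            return intent
    return "unclassified"


for query in ("buy trail running shoes", "best trail running shoes",
              "how to clean running shoes", "acme shoes login", "running shoes"):
    print(f"{query!r}: {classify_intent(query, brand_terms=['acme'])}")
```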

2. Optimize for Featured Snippets

Optimizing for featured snippets can significantly boost your website’s visibility and traffic. Featured snippets are short excerpts that appear at the top of Google’s search results and answer the user’s query directly. To optimize for them, start by identifying common questions related to your industry or niche, using tools like Google Trends or keyword research tools to uncover popular queries. Next, create high-quality content that answers those questions directly. Structure it with descriptive headings so Google can easily find the relevant passage, and place a concise, clear answer near each heading, using bullet points or numbered lists where appropriate. Google selects featured snippets automatically, and there is no markup that designates a page as one; structured data can still help search engines understand the context of your content, but it is not a requirement. Following these steps increases your chances of being chosen as the featured snippet for relevant queries.
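
To review whether your pages give concise answers under question headings, you can scan the HTML. This hypothetical checker pairs each `<h2>` or `<h3>` that ends in a question mark with the first paragraph after it and counts that paragraph’s words. The 60-word limit is an assumption based on common SEO rules of thumb, not a published Google threshold.

```python
from html.parser import HTMLParser


class QuestionAnswerExtractor(HTMLParser):
    """Pairs each question-style <h2>/<h3> with the first <p> that follows it."""

    def __init__(self):
        super().__init__()
        self.pairs = []          # (question, answer_text) tuples
        self._capturing = None   # "heading", "answer", or None
        self._question = None    # most recent heading that ended with "?"
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h2", "h3"):
            self._capturing, self._buffer = "heading", []
        elif tag == "p" and self._question is not None and self._capturing is None:
            self._capturing, self._buffer = "answer", []

    def handle_endtag(self, tag):
        text = " ".join("".join(self._buffer).split())  # collapse whitespace
        if tag in ("h2", "h3") and self._capturing == "heading":
            self._question = text if text.endswith("?") else None
            self._capturing = None
        elif tag == "p" and self._capturing == "answer":
            self.pairs.append((self._question, text))
            self._question, self._capturing = None, None

    def handle_data(self, data):
        if self._capturing:  # also collects text inside nested tags like <strong>
            self._buffer.append(data)


def check_snippet_answers(html, max_words=60):
    """Return (question, word_count, is_concise) for each question heading."""
    extractor = QuestionAnswerExtractor()
    extractor.feed(html)
    results = []
    for question, answer in extractor.pairs:
        count = len(answer.split())
        results.append((question, count, count <= max_words))
    return results
```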

3. Improve Mobile Friendliness

Improving mobile friendliness is crucial now that mobile devices account for a large share of internet usage. Google uses mobile-first indexing, which means it predominantly uses the mobile version of your content, crawled by Googlebot Smartphone, for indexing and ranking. A site that works poorly on mobile devices leads to a poor user experience, higher bounce rates, and ultimately lower search rankings. To improve mobile friendliness, start with a responsive design, which adapts your website’s layout and content to different screen sizes so it looks and works well on smartphones and tablets as well as desktops. Also optimize loading speed, since mobile users tend to have less patience for slow pages: compressing images, minifying CSS and JavaScript files, and leveraging browser caching all help. Finally, test your website on different mobile devices and browsers to catch usability issues or design inconsistencies. Prioritizing mobile friendliness improves the user experience, increases engagement, and helps your visibility in mobile search results.
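
Responsive designs depend on a viewport meta tag such as `<meta name="viewport" content="width=device-width, initial-scale=1">`; without it, mobile browsers lay the page out at a desktop-like width and shrink it to fit. The sketch below checks a page’s HTML for that declaration using Python’s standard `html.parser`. It is only a first-pass check and says nothing about layout, tap targets, or font sizes, so still test on real devices.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name == "viewport" and self.content is None:
            self.content = attributes.get("content") or ""


def has_responsive_viewport(html):
    """True if the page declares width=device-width in its viewport meta tag."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.content is None:
        return False
    directives = {}
    for part in finder.content.split(","):
        key, sep, value = part.partition("=")
        if sep:
            directives[key.strip().lower()] = value.strip().lower()
    return directives.get("width") == "device-width"
```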

4. Leverage Structured Data

Leveraging structured data can greatly enhance your website’s visibility and performance in search results. Structured data is a standardized way of describing a page’s content in markup, so that search engines can read it explicitly instead of inferring everything from the text. By adding structured data, you help Google understand the context and meaning of your content. The most widely used vocabulary is schema.org, which defines types and properties for many kinds of content, including products, articles, events, and more, and Google recommends expressing it in the JSON-LD format. Marking up your content can make your pages eligible for rich results, which are enhanced search listings that display additional information such as star ratings, reviews, and pricing. Eligibility does not guarantee that rich results will appear, but when they do, they can increase your pages’ visibility and click-through rates. You can check your markup with Google’s Rich Results Test.
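
Google recommends JSON-LD for structured data, embedded in a `<script type="application/ld+json">` element. The sketch below generates schema.org `Product` markup with an offer and an aggregate rating; the product details are made up, and you should only mark up information that is actually visible on the page. Validate the output with the Rich Results Test before publishing.

```python
import json


def product_jsonld(name, price, currency, rating, review_count):
    """Build a schema.org Product snippet as a JSON-LD <script> element."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": "https://schema.org/InStock",
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    # Escape "</" so text like "</script>" in a product name cannot end the
    # script element early; "<\/" is still valid JSON for "</".
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(product_jsonld("Blue Widget", 49.99, "USD", 4.6, 128))
```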

5. Monitor Indexing Status

Monitoring your website’s indexing status tells you whether Googlebot is crawling your pages and Google is indexing them. Checking it regularly lets you spot issues that keep pages out of search results. In Google Search Console, the Page indexing report (formerly the Index Coverage report) shows how many of your pages are indexed and lists the reasons others are not, such as being blocked by robots.txt or carrying a “noindex” tag. For an individual page, the URL Inspection tool shows its current index status. Addressing these issues promptly keeps your pages indexed and available for searchers to find. For more on working with Google Search Console, check out our guide on How to Add Keywords in Google Search Console.
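
When Search Console reports a page as excluded by a “noindex” tag, it can help to check the page’s response yourself. The sketch below looks for `noindex` (or `none`, which implies it) in an `X-Robots-Tag` HTTP header and in `robots` or `googlebot` meta tags. How you fetch the headers and HTML is left to you, and this sketch doesn’t interpret user-agent prefixes in the header, so treat any hit as something to inspect rather than a verdict.

```python
from html.parser import HTMLParser

NOINDEX = {"noindex", "none"}  # "none" is shorthand for "noindex, nofollow"


def _directives(text):
    """Split 'noindex, follow' or 'googlebot: noindex' into bare directive names."""
    return {part.rsplit(":", 1)[-1].strip().lower() for part in text.split(",")}


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            self.directives |= _directives(attributes.get("content") or "")


def noindex_signals(headers, html):
    """List the places where a response tells crawlers not to index the page."""
    signals = []
    for key, value in headers.items():
        if key.lower() == "x-robots-tag" and NOINDEX & _directives(value):
            signals.append(f"X-Robots-Tag: {value}")
    finder = RobotsMetaFinder()
    finder.feed(html)
    found = NOINDEX & finder.directives
    if found:
        signals.append("robots meta tag: " + ", ".join(sorted(found)))
    return signals
```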

Conclusion

In conclusion, Googlebot is the gateway between your website and Google Search, which makes it central to both SEO and online marketing. By understanding how Googlebot works and optimizing your website accordingly, you can improve your website’s visibility and ranking in search results, drive targeted organic traffic, and strengthen your online presence. To help Googlebot crawl and index your website effectively, optimize your robots.txt file, submit an XML sitemap, avoid blocking important pages, monitor crawl errors, and optimize page speed. For online marketing, understand user intent, optimize for featured snippets, improve mobile friendliness, leverage structured data, and monitor indexing status. Putting these strategies into practice will strengthen both your SEO and your online marketing efforts.

Frequently Asked Questions

1. What is the difference between Googlebot and Google search engine?

Googlebot is the web crawler that Google uses to discover and index web pages, while the Google search engine is the platform that users interact with to search for information on the internet.

2. How often does Googlebot crawl websites?

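Googlebot’s crawl frequency varies from site to site and from page to page. It depends on factors such as how popular a page is, how often its content changes, and the site’s crawl budget (how many URLs Googlebot can and wants to crawl on it). Popular, frequently updated pages may be crawled several times a day, while pages that rarely change may be revisited only every few weeks.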

3. Can Googlebot access and crawl all types of websites?

Googlebot can crawl and index most types of websites, including static HTML sites, dynamic websites, and even JavaScript-based applications. However, certain types of content, such as password-protected pages or those behind paywalls, may not be accessible to Googlebot.

4. How can I optimize my website for Googlebot?

To optimize your website for Googlebot, focus on creating high-quality, relevant content, optimizing your website’s structure and navigation, improving page load speed, and ensuring mobile-friendliness. Additionally, make sure to submit an XML sitemap, optimize your robots.txt file, and monitor crawl errors.

5. What are crawl errors, and why are they important?

Crawl errors occur when Googlebot is unable to access or crawl certain pages on your website. These errors can impact your website’s visibility and SEO performance. By monitoring crawl errors through Google Search Console, you can identify and fix issues that may be preventing Googlebot from properly crawling and indexing your pages.

6. How can I check if Googlebot has crawled my website?

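Crawling and indexing are different things, so it helps to know what each check tells you. The “site:” operator (for example, “site:yourwebsite.com”) shows a sample of pages from your website that are in Google’s index, but the list isn’t guaranteed to be complete, and it doesn’t show when a page was crawled. For a specific page, the URL Inspection tool in Google Search Console reports whether the URL is indexed and when Googlebot last crawled it. Your server access logs also record Googlebot’s visits, although anyone can fake Googlebot’s user-agent string, so verify suspicious requests with a DNS lookup.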
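
Google’s documented way to confirm that a request really came from Googlebot is a two-step DNS check: a reverse lookup of the IP address should return a hostname under googlebot.com or google.com, and a forward lookup of that hostname should return the same IP. The sketch below implements that check with Python’s `socket` module. The lookup functions are injectable so it can be tested offline; Google also publishes its crawlers’ IP ranges as JSON files, which are an alternative for bulk checks. Other Google fetchers may use different domains, so check Google’s current verification documentation.

```python
import ipaddress
import socket

# Hostname suffixes for Googlebot; other Google fetchers may use other domains.
GOOGLEBOT_DOMAINS = (".googlebot.com", ".google.com")


def _forward_ips(hostname):
    """Resolve a hostname to the set of IPv4/IPv6 addresses it points to."""
    return {info[4][0] for info in socket.getaddrinfo(hostname, None)}


def is_googlebot_ip(ip, reverse_lookup=socket.gethostbyaddr, forward_lookup=_forward_ips):
    """Reverse-DNS the IP, check the domain, then forward-confirm the hostname."""
    try:
        hostname = reverse_lookup(ip)[0].lower().rstrip(".")
    except OSError:  # socket.herror / socket.gaierror, e.g. no PTR record
        return False
    # Match on ".googlebot.com" (with the dot) so "evilgooglebot.com" fails.
    if not hostname.endswith(GOOGLEBOT_DOMAINS):
        return False
    try:
        resolved = {ipaddress.ip_address(addr) for addr in forward_lookup(hostname)}
    except (OSError, ValueError):
        return False
    # The forward lookup must lead back to the address that made the request.
    return ipaddress.ip_address(ip) in resolved
```

For example, `is_googlebot_ip("66.249.66.1")` performs live DNS lookups for an IP taken from your logs.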

7. Can I block Googlebot from crawling certain pages of my website?

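Yes, you can add Disallow rules to your robots.txt file to stop Googlebot from crawling specific pages or directories on your website. Be careful when blocking pages, as it can reduce your website’s visibility in search results. Also note that robots.txt prevents crawling, not indexing: to keep a page out of Google’s results, let Googlebot crawl it and add a noindex meta tag or X-Robots-Tag header instead.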

8. What is the impact of page speed on Googlebot’s crawling?

Server speed affects how much Googlebot crawls. Google sets a crawl capacity limit for each site: if your server responds quickly, Googlebot can crawl more pages, and if it slows down or returns server errors, Googlebot crawls less to avoid overloading it. On large sites, this can leave some pages crawled late or not at all. Optimizing your server response times and page speed helps Googlebot crawl and index your pages efficiently.

9. Can Googlebot crawl and index images and videos?

Yes, Googlebot can crawl and index images and videos. To optimize their visibility in search results, make sure to provide descriptive filenames, alt text, and captions for images, and include relevant metadata and transcripts for videos.

10. How long does it take for changes on my website to be reflected in search results?

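The time it takes for changes on your website to be reflected in search results varies. Minor changes may show up within a few days to a week, while significant changes or new content may take several weeks for Googlebot to discover, crawl, and index. For an important page, you can ask Google to recrawl it with the URL Inspection tool in Google Search Console, although requesting a crawl does not guarantee faster inclusion.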