Why Recrawling is Important

Recrawling your website on Google matters for three main reasons. First, it supports search engine visibility: a page can only appear in search results once it has been crawled and indexed, so keeping the index current gives your pages a better chance of attracting organic traffic and potential customers. Second, it updates the indexing of new content, so that the latest and most relevant information is what users see. Third, it gives Google the chance to pick up fixes for crawling errors that occurred on earlier visits, which increases how much of your site can be crawled at all. So, whether you have made updates to your site, added new content, or encountered crawling issues, recrawling is essential to maintain a strong online presence.

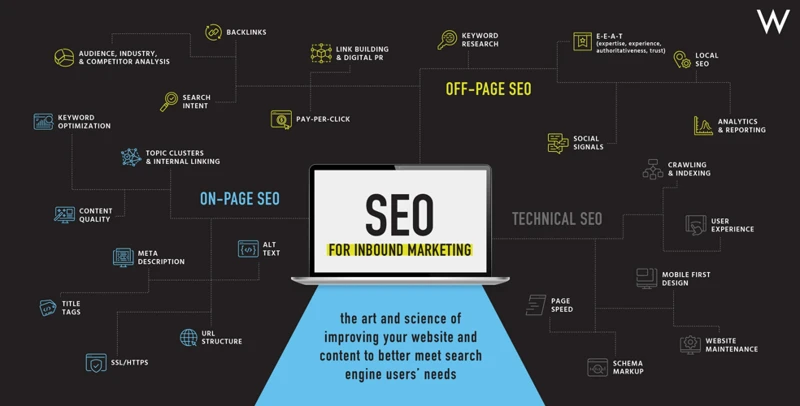

1. Enhance Search Engine Visibility

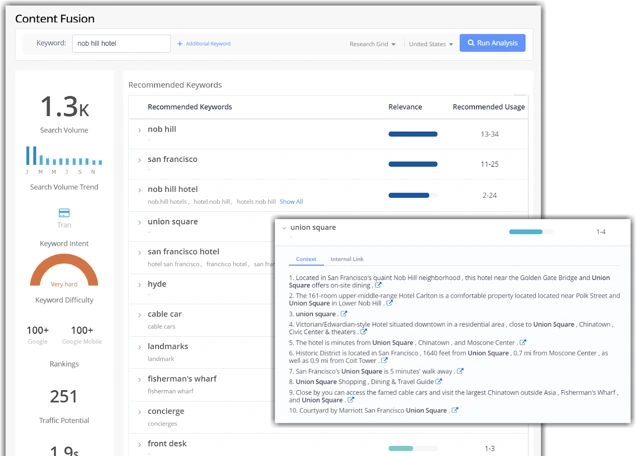

Enhancing search engine visibility is essential for driving organic traffic to your website. When your site is properly indexed and visible on search engines, it has a higher chance of appearing in relevant search results. This means that users searching for keywords related to your business or content are more likely to find and visit your website. To enhance search engine visibility, it is important to focus on optimizing your website for search engines. This includes using relevant keywords in your content, optimizing meta tags, improving website speed and performance, and building high-quality backlinks. Additionally, regularly updating your website with fresh and valuable content can also help improve search engine visibility. By following these strategies, you can increase your website’s visibility on search engines and attract more organic traffic. For more information on how to optimize your website for search engines, you can refer to our guide on how to add keywords in Google Search Console.

2. Update Indexing of New Content

Updating the indexing of new content is an important aspect of recrawling your website on Google. When you add fresh content to your site, such as blog posts, articles, or product pages, it’s crucial to ensure that they are properly indexed by search engines. Here are a few steps to follow when updating the indexing of new content:

1. Optimize your content: Before submitting your new content for indexing, make sure it is optimized for search engines. This includes using relevant keywords, writing informative meta descriptions, and structuring your content with headers and subheadings.

2. Submit your sitemap: A sitemap is a file that lists the URLs on your website that you want search engines to crawl. Submitting it to Google through Google Search Console helps Google discover your new content sooner, although it does not guarantee that every listed URL will be crawled or indexed.

3. Inspect the URL: Use the URL Inspection tool in Google Search Console (the replacement for the retired “Fetch as Google” tool) to check the URLs of your new content. It shows how Googlebot sees the page and lets you request indexing for it.

4. Monitor indexing status: Keep an eye on the Index Coverage Report in Google Search Console to see if there are any issues with indexing your new content. If there are any errors or warnings, take the necessary steps to resolve them.

By following these steps, you can ensure that your new content gets indexed promptly and is visible to users searching for relevant information. Remember, regularly updating and optimizing your website’s content is key to maintaining a strong online presence and attracting organic traffic.

3. Fix Crawling Errors

When it comes to fixing crawling errors on your website, there are several important steps to take. Firstly, make sure to regularly monitor your website’s crawl errors using tools like Google Search Console. This will help you identify any issues that may be preventing Googlebot from properly crawling and indexing your site. Common crawling errors include broken links, server errors, and inaccessible pages. Once you have identified the errors, it’s time to take action. Start by fixing any broken links or redirecting them to relevant pages. Ensure that your server is properly configured and there are no issues that may be causing errors. Additionally, check for any blocked resources or pages that may be preventing proper crawling. Fixing these crawling errors is essential to ensure that your website is accessible to search engines, allowing them to properly index and rank your content. By addressing these issues, you can improve your website’s visibility and increase organic traffic. For more information on how to use Googlebot effectively, check out our comprehensive guide on how to use Googlebot.
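As a rough illustration of how the error types above can be triaged, the following Python sketch sorts URLs into buckets by HTTP status code. The bucket names and the `classify_status` and `summarize` helpers are hypothetical, and the status codes are assumed to come from a crawl you have already run; the script does not fetch anything itself.

```python
def classify_status(status: int) -> str:
    """Map an HTTP status code to a rough crawl-error bucket (illustrative only)."""
    if 200 <= status < 300:
        return "ok"
    if 300 <= status < 400:
        return "redirect"
    if status in (404, 410):
        return "broken link"
    if status in (401, 403):
        return "inaccessible"
    if 500 <= status < 600:
        return "server error"
    return "other"


def summarize(results: dict) -> dict:
    """Group URLs by bucket; `results` maps each URL to its status code."""
    buckets = {}
    for url, status in results.items():
        buckets.setdefault(classify_status(status), []).append(url)
    return buckets


# Example input: status codes collected by a crawler of your choice.
crawl_results = {
    "https://www.example.com/": 200,
    "https://www.example.com/old-page": 404,
    "https://www.example.com/api": 503,
}
report = summarize(crawl_results)
```

Grouping by bucket makes it easier to decide what to do next: broken links usually call for a fix or a redirect, while server errors point to hosting or configuration problems.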

Step 1: Review Your Website’s Current Indexing Status

To begin the recrawling process, it is important to review your website’s current indexing status. This will help you identify any issues and determine which pages are not being indexed by Google. One way to check your indexing status is to use the Index Coverage Report in Google Search Console. This report provides insights into how Google is crawling and indexing your website. It will show you the number of pages indexed, any errors encountered during crawling, and any pages that are blocked by robots.txt. By reviewing this report, you can get a clear understanding of the current state of your website’s indexing. Additionally, it is important to identify any pages that are not indexed by performing a manual search on Google using the site: operator. This will give you an idea of which pages are not appearing in search results. Once you have a comprehensive understanding of your website’s indexing status, you can move on to the next step of the recrawling process.

1. Check the Index Coverage Report

To begin recrawling your website on Google, the first step is to check the Index Coverage Report. This report shows which pages of your website have been indexed by Google and which ones have not. To access it, you need a Google Search Console account with your website added and verified as a property. Once you have logged in, navigate to the Index Coverage section (shown as “Pages” under “Indexing” in current versions of Search Console). Here, you will find your pages grouped by index status, along with the reason each group of pages is not indexed. By reviewing this report, you can identify which pages are not indexed and take the necessary steps to address the underlying issues.

2. Identify Pages Not Indexed

Once you know your website’s overall indexing status, identify the specific pages that are not indexed. This step shows you which pages Google’s search engine is not recognizing. The Index Coverage Report in Google Search Console lists these pages together with the error or issue that is keeping each one out of the index. Another method is to search manually with the “site:” operator in Google’s search bar, for example “site:example.com/blog”. This shows which pages appear in the search results, but treat it as a rough check: a “site:” search does not necessarily return every indexed page. By identifying the pages that are not indexed, you can move forward with resolving the indexing issues and ensuring that all the relevant content on your website is visible to users.
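One way to make this comparison systematic is to diff the URLs in your sitemap against a list of URLs known to be indexed. The Python sketch below assumes you already have the sitemap contents as a string and a set of indexed URLs, for example copied from a Search Console export. The function names and sample data are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> set:
    """Return every <loc> value found in a sitemap document."""
    root = ET.fromstring(sitemap_xml.strip())
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def not_indexed(sitemap_xml: str, indexed: set) -> list:
    """URLs listed in the sitemap that are missing from the indexed set."""
    return sorted(sitemap_urls(sitemap_xml) - indexed)


# Hypothetical sample data.
SAMPLE_SITEMAP = """
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/blog/post-1</loc></url>
  <url><loc>https://www.example.com/blog/post-2</loc></url>
</urlset>
"""
INDEXED = {"https://www.example.com/", "https://www.example.com/blog/post-1"}

missing = not_indexed(SAMPLE_SITEMAP, INDEXED)
```

The resulting list is a starting point for the next step, where you work out why each page is missing.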

Step 2: Identify Reasons for Non-Indexing

Before you request a recrawl, identify the reasons for non-indexing. This step will help you understand why certain pages or content on your website are not being indexed by Google. One possible reason is a problem with the robots.txt file. This file tells search engines which parts of your site they may crawl. If a page is blocked there, Google cannot read its content, so the page is usually left out of the index or shown without a description. Another reason could be the presence of noindex tags or directives on your website. These instruct search engines not to index specific pages or sections of your site. If you have unintentionally added them, they could be preventing Google from indexing your content. Additionally, duplicate content problems can also lead to non-indexing. If Google detects multiple pages with the same content, it typically picks one version as the canonical page to index and leaves the others out of search results. By identifying these reasons for non-indexing, you can take the necessary steps to resolve them and ensure that your website is properly indexed by Google.

1. Robots.txt File Issues

One common reason for non-indexing of web pages is robots.txt file issues. The robots.txt file is a text file that tells search engine bots which parts of your site they may crawl. If it is misconfigured or contains incorrect directives, it can keep search engines from fetching pages on your website, and a page that cannot be fetched cannot be indexed with its content. To identify and resolve robots.txt file issues, you can follow these steps:

1. Review your robots.txt file: Check if there are any blocking directives that may be preventing search engines from crawling certain pages. Make sure that important pages are not unintentionally blocked.

2. Verify the syntax: Ensure that the syntax of your robots.txt file is correct. Even a small error can lead to issues in crawling and indexing.

3. Test the file in Google Search Console: The robots.txt report shows which robots.txt files Google found for your site, when they were last fetched, and any warnings or errors. To check whether a specific page is blocked, run it through the URL Inspection tool.

4. Let Google pick up the updated file: Once you have made the necessary changes, upload the file to the root of your site. There is no separate submission step. Google refetches robots.txt on its own, typically within about a day, and the robots.txt report lets you request a recrawl of the file if you need the change picked up sooner.

By resolving robots.txt file issues, you can ensure that search engines are able to reach the pages you want indexed, leading to improved indexing and visibility in search results.
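For a quick local check of the kind described in step 1, Python’s standard-library `urllib.robotparser` can report which URLs a given robots.txt would block. This is a minimal sketch with hypothetical rules and URLs. Note that the standard-library parser does not reproduce every detail of how Googlebot matches rules (wildcard patterns, for example), so treat the result as a first pass and confirm in Search Console.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list, user_agent: str = "Googlebot") -> list:
    """Return the URLs that the given robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt that blocks the blog by mistake.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /blog/
"""

PAGES = [
    "https://www.example.com/blog/post-1",
    "https://www.example.com/products/",
    "https://www.example.com/admin/login",
]

blocked = blocked_urls(ROBOTS_TXT, PAGES)
```

Running the same function against the corrected file before uploading it is a cheap way to confirm that the important pages are no longer blocked.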

2. Noindex Tags or Directives

Noindex tags or directives can prevent search engines from indexing specific pages or sections of your website. These tags are commonly used when you want to hide certain content from search engine results. However, if these tags are mistakenly applied to important pages or sections, it can result in those pages not being indexed by search engines. This can negatively impact your website’s visibility and organic traffic. To identify if you have any pages with noindex tags or directives, you can review your website’s source code or use tools like Google Search Console. Once you have identified the pages with noindex tags, you can remove or update them to allow search engines to index those pages. It’s important to regularly check for and resolve any issues related to noindex tags or directives to ensure that your website’s content is fully indexed and accessible to users and search engines alike.
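Reviewing source code by hand gets tedious on larger sites, so a small script can help. The Python sketch below checks one page for a noindex directive, looking at the robots meta tag and, if you pass in the response headers, at the `X-Robots-Tag` header. The `has_noindex` helper is hypothetical, and it assumes you have already downloaded the HTML and headers yourself. It also ignores user-agent prefixes inside the header value, which a fuller check would need to handle.

```python
from html.parser import HTMLParser
from typing import Optional

# "none" is shorthand for "noindex, nofollow", so it also blocks indexing.
BLOCKING = {"noindex", "none"}


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html: str, headers: Optional[dict] = None) -> bool:
    """True if the page carries a noindex directive in its HTML or headers."""
    parser = RobotsMetaParser()
    parser.feed(html)
    found = set(parser.directives)
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            found.update(d.strip().lower() for d in value.split(","))
    return bool(found & BLOCKING)


# Hypothetical page that was left on noindex after a site launch.
PAGE = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
flagged = has_noindex(PAGE)
```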

3. Duplicate Content Problems

Duplicate content problems can negatively impact your website’s indexing and search engine rankings. When search engines encounter duplicate content, they may have difficulty determining which version of the content to index and display in search results. This can result in lower visibility and reduced organic traffic for your website. Duplicate content can arise from various sources, such as multiple URLs leading to the same content, printer-friendly versions of web pages, or content syndication. It is important to identify and resolve duplicate content issues to ensure that search engines properly index and rank your website. One way to address duplicate content is by implementing canonical tags, which indicate the preferred version of a web page. Additionally, regularly auditing your website for duplicate content and implementing proper redirects can help prevent indexing and ranking issues. By resolving duplicate content problems, you can improve your website’s overall visibility and search engine performance.

Step 3: Resolve Non-Indexing Issues

To resolve non-indexing issues on your website, follow these steps:

1. Update Robots.txt File: The robots.txt file is a text file that tells search engine bots which pages they may crawl. Check if there are any restrictions in your robots.txt file that may be preventing certain pages from being crawled and indexed. Make sure to remove any disallow directives that are blocking important content.

2. Remove Noindex Tags or Directives: Noindex tags or directives can be added to specific pages or sections of your website to prevent them from being indexed. Review your website’s code and content management system to ensure that noindex tags or directives are not mistakenly applied. Remove any instances of these tags or directives for the pages you want to be indexed.

3. Resolve Duplicate Content Issues: Duplicate content can confuse search engines and affect your website’s indexing. Identify and resolve any instances of duplicate content on your website, whether it’s duplicate pages, similar content across multiple URLs, or content copied from other sources. Use canonical tags to indicate the preferred version of duplicate pages.

By addressing these non-indexing issues, you can improve the chances of your website being properly crawled and indexed by search engines, resulting in better visibility and organic traffic.

1. Update Robots.txt File

To resolve non-indexing issues, the first step is to update your robots.txt file. This file tells search engine crawlers which parts of your website to crawl and which parts to ignore. By making changes to this file, you can control how search engines access your content. Start by accessing your website’s root directory and locating the robots.txt file. Open the file and review its contents. Look for any rules that may be preventing search engines from crawling certain pages or directories. If you find any outdated or incorrect directives, make the necessary updates. For example, if you want search engines to crawl a previously blocked page, remove the disallow directive for that specific page. Once you have made the necessary changes, save the file and upload it back to your website’s root directory. Google generally caches robots.txt for up to 24 hours, so the change may take about a day to have an effect. Remember to regularly review and update your robots.txt file to ensure that it aligns with your website’s content and goals.

2. Remove Noindex Tags or Directives

When it comes to recrawling your website on Google, it’s important to identify and remove any noindex tags or directives that may be preventing certain pages from being indexed. A noindex directive tells search engines not to include a particular page in their index. It can be set as a robots meta tag in the page’s HTML or as an X-Robots-Tag HTTP response header. This could be intentional, such as when you don’t want a specific page to be searchable, or it could be unintentional, resulting from misconfigurations or outdated settings.

To remove the noindex tags or directives, review your website’s source code, content management system settings, and server configuration. Look for “noindex” in the content of any robots meta tag and in the X-Robots-Tag header. Also check that robots.txt is not blocking the same pages: Google has to crawl a page to see that its noindex directive is gone, so a robots.txt block keeps the old state in place.

Once you have identified the pages with noindex tags or directives, you can proceed to remove them. Delete or update the relevant HTML code or header so that the pages are set to be indexed by search engines. This will allow Google to recrawl and index the previously excluded pages, improving their visibility in search engine results.

Remember to regularly check for any new instances of Noindex tags or directives that may arise, especially when making changes to your website’s structure or content. Ensuring that your pages are correctly indexed will help maximize your website’s visibility and increase its chances of ranking higher in search engine results.

3. Resolve Duplicate Content Issues

Duplicate content can hurt your website’s search engine rankings. When search engines like Google encounter duplicate content, they have to choose one version to index and show, and the version they pick may not be the one you prefer. Ranking signals such as links can also end up split across the duplicates. Duplicate content does not normally lead to a penalty unless it is meant to be deceptive, but it can still cost you visibility. To resolve duplicate content issues, there are a few strategies you can employ. Firstly, you can use the rel="canonical" tag to indicate the preferred version of a page. This tag tells search engines that the specified URL is the original or canonical version of the content, helping to consolidate ranking signals. Another option is to use 301 redirects to send duplicate URLs to the original page. This tells search engines that the duplicate pages have been permanently replaced by the original version. The URL Parameters tool that Search Console once offered has been retired, so duplicates caused by URL parameters are now best handled with canonical tags, consistent internal linking, and redirects. By taking these steps to resolve duplicate content issues, you can improve your website’s search engine rankings and provide a better user experience.
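To find duplicates caused by URL parameters, it can help to normalize your URLs and see which ones collapse to the same address. The Python sketch below does this with the standard library. The list of tracking parameters and the choice to ignore trailing slashes are assumptions made for illustration, so adjust both to match how your own site treats its URLs.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters that do not change the page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def normalize(url: str) -> str:
    """Reduce a URL to a comparable form (assumes trailing slashes are optional)."""
    parts = urlsplit(url)
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    )
    path = parts.path.rstrip("/") or "/"
    # Drop the fragment: it never reaches the server.
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), ""))


def duplicate_groups(urls: list) -> dict:
    """Map each normalized URL to the original URLs that collapse onto it."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}


URLS = [
    "https://www.example.com/shoes/",
    "https://www.example.com/shoes?utm_source=newsletter",
    "https://www.example.com/hats",
]
groups = duplicate_groups(URLS)
```

Each group is a candidate for a single rel="canonical" target or a 301 redirect, with the normalized URL as one possible choice for the preferred version.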

Step 4: Request Google to Recrawl Your Website

There are two ways to request a recrawl, depending on how many pages have changed:

For individual pages, use the URL Inspection tool in Google Search Console, which lets you monitor and manage your website’s presence in Google search results. Log in to your Google Search Console account and select your website property. Then, open the “URL Inspection” tool, enter the URL of the page you want Google to recrawl, and click on the “Request Indexing” button. Google adds the page to its crawl queue, although this does not guarantee immediate crawling or inclusion in the index.

For many pages or an entire website, submit a sitemap. A sitemap is a file that lists the URLs on your site and can provide additional information about each page, such as when it was last modified. To submit a sitemap, access your Google Search Console account and navigate to the “Sitemaps” section. Enter the URL of your sitemap under “Add a new sitemap” and click “Submit”. Google will then fetch your sitemap and use it to schedule crawling of the pages listed within it.

By following these steps, you can ensure that Google recrawls your website promptly and updates its index with your latest content and changes. This will help improve your website’s visibility and ensure that it accurately reflects your online presence.

1. Use Google Search Console

To request Google to recrawl your website, one effective method is to use Google Search Console. Here’s how you can do it:

1. Sign in to Google Search Console: Access the Google Search Console dashboard by logging in to your Google account and selecting your website property.

2. Select the correct property: If you have multiple websites registered, make sure you choose the correct property that you want to recrawl.

3. Open the URL Inspection tool: Click on “URL inspection” in the left-hand menu, or use the inspection search bar at the top of any Search Console page.

4. Enter the URL: Type the full URL of the specific page you want to recrawl into the search bar. The URL must belong to the property you selected.

5. Inspect the URL: Press Enter to start the inspection. Google will look up the URL and show its current index status.

6. Request indexing: If Google determines that the URL is not indexed or needs to be recrawled, you will see a message indicating this. To request indexing, click on the “Request Indexing” button.

7. Submit the sitemap: To speed up the recrawling process for multiple pages, it’s recommended to submit a sitemap. In the Google Search Console dashboard, go to the “Sitemaps” section and add your sitemap URL.

Using Google Search Console is a straightforward and effective way to request recrawling of your website. By following these steps, you can ensure that your website’s latest content is indexed and available in Google’s search results.

2. Submit a Sitemap

Submitting a sitemap is an important step in recrawling your website on Google. By providing a sitemap to Google, you are making it easier for the search engine to discover and index your web pages. Here’s how you can submit a sitemap:

1. Create a sitemap: Use a sitemap generator tool or a plugin if you’re using a content management system (CMS) like WordPress. A sitemap is a file, usually in XML format, that lists the URLs on your website that you want search engines to crawl.

2. Verify your website: Ensure that you have verified ownership of your website using Google Search Console. This step is necessary to access the necessary features and tools.

3. Access the Sitemaps report: In Google Search Console, navigate to the “Sitemaps” section. Here, you will see a list of any previously submitted sitemaps.

4. Add a new sitemap: Under “Add a new sitemap”, enter the URL of your sitemap. It should typically be in the format “https://www.example.com/sitemap.xml”.

5. Submit the sitemap: Click on the “Submit” button to notify Google about your sitemap. Google will then fetch the sitemap and use it to schedule crawling of the listed pages.

6. Monitor the sitemap: Keep an eye on the sitemap report in Google Search Console to check for any errors or issues with your sitemap. Fix any problems promptly to ensure proper indexing of your web pages.

Submitting a sitemap is an effective way to communicate the structure and content of your website to Google. It helps the search engine understand your site’s organization and index your pages more efficiently. By regularly updating and submitting your sitemap, you can ensure that Google recrawls and indexes your website accurately.
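If you would rather generate the sitemap from step 1 yourself than rely on a plugin, the format is simple enough to produce with a few lines of Python. This is a minimal sketch following the sitemaps.org XML format. The `build_sitemap` helper and the example URLs are hypothetical, and optional fields such as the last-modified date are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_XMLNS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list) -> str:
    """Return a sitemap document listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_XMLNS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes special characters such as "&" when serializing.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap = build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/blog/post-1",
])

# To publish it, write the string to sitemap.xml in your site's root, e.g.:
# with open("sitemap.xml", "w", encoding="utf-8") as handle:
#     handle.write(sitemap)
```

Regenerating the file whenever pages are added or removed keeps the sitemap in line with what you actually want crawled.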

Conclusion

In conclusion, recrawling your website on Google is a crucial step to ensure search engine visibility, update indexing of new content, and fix crawling errors. By following the step-by-step guide provided in this article, you can review your website’s current indexing status, identify reasons for non-indexing, resolve any issues, and request Google to recrawl your website. Taking these actions will help improve your website’s performance, increase organic traffic, and ultimately enhance your online presence. Remember, staying on top of recrawling is an ongoing process, as you should regularly check and update your website to keep it optimized for search engines. So, don’t neglect the importance of recrawling and take the necessary steps to ensure your website is properly indexed and easily found by users.

Frequently Asked Questions

1. How often should I recrawl my website on Google?

There is no set timeframe for recrawling your website on Google. It largely depends on the frequency of updates and changes you make to your site. If you regularly add new content or make significant changes, it’s a good idea to recrawl your website to ensure it is properly indexed.

2. Can I recrawl specific pages instead of my entire website?

Yes, you can recrawl specific pages by using the URL Inspection tool in Google Search Console. This allows you to request indexing for individual pages that you want to be re-crawled by Google.

3. What should I do if my website is not appearing in Google search results?

If your website is not appearing in Google search results, it may not be properly indexed. You can follow the steps outlined in this guide to review your website’s indexing status, identify the reasons for non-indexing, and resolve any issues that may be preventing your website from being indexed.

4. How can I check if my website has duplicate content issues?

You can use tools like Copyscape or Siteliner to check for duplicate content on your website. These tools will scan your website and identify any instances of duplicate content, allowing you to take necessary actions to resolve the issue.

5. What are common reasons for pages not being indexed?

Common reasons for pages not being indexed include having a robots.txt file that blocks search engine crawlers, using noindex tags or directives, or having duplicate content issues. By reviewing your website’s indexing status and following the steps in this guide, you can identify and resolve these issues.

6. Can I recrawl my website on Google without using Google Search Console?

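While using Google Search Console is the recommended method for requesting a recrawl, you can also prompt Google indirectly. Listing your sitemap in your robots.txt file with a “Sitemap:” line lets Google find it without a Search Console submission, and new links to your pages from sites Google already crawls help Googlebot discover them.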

7. How long does it take for Google to recrawl my website?

The time it takes for Google to recrawl your website can vary. Google’s own guidance is that crawling can take anywhere from a few days to a few weeks, and requesting a recrawl does not guarantee that a page will be indexed. It’s important to be patient and to monitor progress in Search Console rather than resubmitting.

8. Can I recrawl my website on Google multiple times?

Yes, you can request recrawls as often as your content changes. Keep in mind that Search Console limits how many individual URLs you can submit, and that requesting a recrawl of the same URL several times will not get it crawled any faster. For a large number of changed pages, submitting an updated sitemap is the better option.

9. Should I submit a sitemap every time I recrawl my website?

Submitting a sitemap is not necessary every time you recrawl your website. However, if you have made significant changes to your website’s structure or added a large amount of new content, submitting an updated sitemap can help Google understand and index your site more efficiently.

10. Can I request Google to recrawl specific URLs instead of my entire website?

Yes, you can request Google to recrawl specific URLs by using the URL Inspection tool in Google Search Console. This allows you to individually submit URLs for recrawling, ensuring that specific pages are updated in Google’s index.