Web scraping is the process of extracting data from websites. It involves using automated tools or scripts to access and gather information from web pages, such as text, images, and links.

Understanding Search Engines

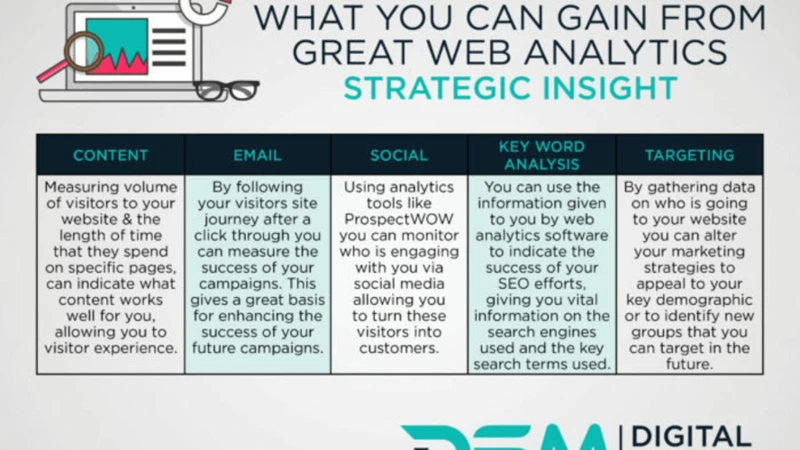

Search engines are powerful tools that help users find relevant information on the internet. They work by indexing and organizing vast amounts of web pages, making it easier for users to discover the content they are looking for. To understand how search engines function, it’s important to grasp the fundamental concepts they rely on.

Crawling: Search engines employ web crawlers, also known as spiders or bots, to navigate the internet and discover new web pages. These crawlers follow links from one page to another, collecting data along the way. They essentially create a map of the internet, which is later used for indexing.

Indexing: Once a web page is discovered, it is analyzed and stored in a massive database called an index. This index contains information about the content, structure, and relevance of each web page. It allows search engines to retrieve relevant results quickly when a user performs a search query.

Ranking: Search engines use complex algorithms to determine the ranking of web pages in search results. These algorithms take into account various factors, such as the relevance of the content to the search query, the authority and credibility of the website, and the overall user experience.

Search Queries: When a user enters a search query, the search engine retrieves relevant web pages from its index and presents them in the search results. The search engine aims to provide the most relevant and useful results based on the user’s query.

By understanding these key components of search engines, you can gain insight into how they work and make informed decisions when creating your own search engine. It is also essential to stay updated on the latest trends and advancements in search engine technology to ensure your search engine remains efficient and effective.
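The crawling step described above can be sketched as a breadth-first traversal of links. This is a minimal illustration, not how any particular search engine does it: the `FAKE_WEB` dictionary, the `crawl` function, and the `example.com` URLs are all hypothetical stand-ins, and a real crawler would fetch each page over HTTP instead of reading links from a dictionary.

```python
from collections import deque

# Hypothetical in-memory "web": each URL maps to the URLs it links to.
# A real crawler would download each page and parse its links instead.
FAKE_WEB = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b"],
    "https://example.com/a": ["https://example.com/b", "https://example.com/c"],
    "https://example.com/b": ["https://example.com/"],
    "https://example.com/c": [],
}

def crawl(seed, get_links, max_pages=100):
    """Breadth-first crawl from seed; returns URLs in discovery order."""
    seen = {seed}
    frontier = deque([seed])
    order = []
    while frontier and len(order) < max_pages:
        url = frontier.popleft()
        order.append(url)
        for link in get_links(url):
            if link not in seen:  # never queue the same page twice
                seen.add(link)
                frontier.append(link)
    return order

pages = crawl("https://example.com/", lambda u: FAKE_WEB.get(u, []))
print(pages)
```

The `seen` set is what keeps the crawler from looping forever when pages link back to each other, as the home page and page `b` do here.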

Choosing a Programming Language

Selecting the right programming language is crucial when creating a search engine. Different languages offer various advantages and considerations, so it’s essential to evaluate your project requirements and goals before making a decision.

Python: Python is a popular choice for search engine development due to its simplicity and readability. It offers a wide range of libraries and frameworks that are specifically designed for web scraping, data manipulation, and natural language processing. Python’s versatility and extensive community support make it an excellent option for building a search engine.

Java: Java is a robust and scalable programming language widely used in enterprise-level applications. It provides excellent performance and is suitable for handling large datasets. Java’s object-oriented approach and extensive libraries make it a reliable choice for building complex search engine systems.

C++: C++ is a high-performance language commonly used in system-level programming. It offers low-level control over hardware resources, making it suitable for building search engines that require efficient memory management and fast processing speeds. C++ is often preferred for developing search engine components like web crawlers and indexing algorithms.

PHP: PHP is a server-side scripting language primarily used for web development. It has a vast ecosystem of frameworks and libraries that can be leveraged for search engine development. PHP’s simplicity and ease of integration with databases make it a practical choice for building search engines that prioritize web scraping and data handling.

When choosing a programming language, consider factors such as your familiarity with the language, the availability of relevant libraries and frameworks, the scalability and performance requirements of your search engine, and the level of community support. By carefully evaluating these considerations, you can select the programming language that best suits your needs and ensures the successful development of your search engine.

Gathering Data

Gathering data is a critical step in creating a search engine. There are two primary methods for collecting data: web scraping and API integration.

Web scraping involves extracting information from websites by parsing the HTML code. This method allows you to gather data from any website, even if they don’t provide an API. However, it’s important to respect website policies and guidelines to avoid legal issues. You can use tools like BeautifulSoup in Python to scrape web pages efficiently.

API integration involves accessing data from web services through their APIs. Many websites offer APIs that allow developers to retrieve specific data in a structured format. This method is more reliable and efficient as it provides direct access to the desired information. For example, you can integrate with social media APIs to gather data from platforms like Twitter or Facebook.

Regardless of the method you choose, it’s crucial to ensure the data you gather is relevant, accurate, and up-to-date. Regularly updating your data ensures that your search engine provides the most current and useful information to users. It’s also essential to respect website policies and terms of service when collecting data to maintain ethical practices.

Web Scraping

Web scraping is a technique used to extract data from websites. It involves writing code to automate the process of gathering information from web pages. There are various tools and libraries available that can assist in web scraping, such as BeautifulSoup and Selenium.

When it comes to creating a search engine, web scraping plays a crucial role in gathering data from the internet. The web scraper visits different websites, navigates through their pages, and extracts relevant information. This data is then stored and used for indexing and ranking purposes.

Web scraping can be used to collect a wide range of data, including text, images, links, and metadata. For example, when building a search engine, the web scraper can extract the title, URL, and content of each web page. It can also crawl through the website’s internal links to gather more data and create a comprehensive index.
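As a rough sketch of extracting the title, text, and links from a page, the example below uses Python's standard-library `html.parser` rather than BeautifulSoup so that it runs without extra installs. The `PageExtractor` class, the `extract` helper, and the sample HTML are assumptions made for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

class PageExtractor(HTMLParser):
    """Collects the title, visible text, and absolute link URLs of one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.title = ""
        self.links = []
        self.text_parts = []
        self._in_title = False
        self._skip_depth = 0  # greater than 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page URL.
                self.links.append(urljoin(self.base_url, href))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif not self._skip_depth and data.strip():
            self.text_parts.append(data.strip())

def extract(html, base_url):
    parser = PageExtractor(base_url)
    parser.feed(html)
    parser.close()
    return {
        "url": base_url,
        "title": parser.title.strip(),
        "text": " ".join(parser.text_parts),
        "links": parser.links,
    }

sample = """<html><head><title>Hello</title><style>p{}</style></head>
<body><h1>Welcome</h1><p>Some <a href="/about">about</a> text.</p></body></html>"""
page = extract(sample, "https://example.com/index.html")
print(page["title"], page["links"])
```

The extracted links can be fed back into the crawl queue, while the title and text go on to parsing and indexing.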

It is important to note that while web scraping can be a powerful tool, it should be used ethically and within the bounds of the law. It is essential to respect the website’s terms of service and not overload their servers with excessive requests. Additionally, some websites may have measures in place to prevent scraping, such as CAPTCHAs or IP blocking.
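One common way to respect a site's wishes is to check its robots.txt file before fetching a page. The sketch below uses the standard-library `urllib.robotparser`. The robots.txt content and the `MySearchBot` user agent are made up for the example; a real crawler would download the file from the site itself.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "MySearchBot"  # hypothetical crawler name

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())

def allowed(url):
    """True if the parsed robots.txt rules permit fetching this URL."""
    return robots.can_fetch(USER_AGENT, url)

def polite_delay(default=1.0):
    """Seconds to wait between requests: the site's Crawl-delay, else default."""
    delay = robots.crawl_delay(USER_AGENT)
    return float(delay) if delay is not None else default

print(allowed("https://example.com/index.html"))    # True
print(allowed("https://example.com/private/data"))  # False
print(polite_delay())                               # 2.0
```

A crawler would typically call `allowed` before each request and sleep for `polite_delay()` seconds between requests to the same host.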

Web scraping is a valuable technique for gathering data when creating a search engine. It allows you to extract relevant information from web pages and build a comprehensive index for efficient searching. By understanding the principles of web scraping and using it responsibly, you can ensure the success of your search engine project.

API Integration

In the process of gathering data for your search engine, one important method to consider is API integration. An API, or Application Programming Interface, allows different software applications to communicate and exchange data with each other. By integrating relevant APIs into your search engine, you can access a wide range of data sources and retrieve information in a structured and efficient manner.

API integration provides several benefits for your search engine. Firstly, it allows you to tap into external databases and services, such as social media platforms, news websites, or online directories. This expands the scope of your search engine, enabling it to provide more comprehensive and up-to-date results to users.

Additionally, API integration can enhance the relevance and quality of the data you gather. For example, by integrating an API that provides location data, you can offer location-based search results to users, making their searches more personalized and targeted.

When integrating APIs into your search engine, it is crucial to choose reputable and reliable sources. Look for APIs that provide accurate and relevant data, and ensure that they have proper documentation and support. This will help you avoid any potential issues or discrepancies in the data retrieved.

It is important to handle API requests efficiently to avoid overloading your system or violating any usage limits set by the API provider. Implementing caching mechanisms and optimizing API calls can help improve the performance and responsiveness of your search engine.
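One possible caching mechanism is a small time-to-live (TTL) cache that reuses a response until it is a given number of seconds old. The `TTLCache` class and the `fake_api` function below are illustrative assumptions; `fake_api` stands in for a real HTTP request to a provider.

```python
import time

class TTLCache:
    """Caches values for ttl seconds so repeated lookups skip the API call."""

    def __init__(self, ttl, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock  # injectable so expiry can be tested without waiting
        self._store = {}    # key -> (expires_at, value)

    def get_or_fetch(self, key, fetch):
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]  # still fresh: no API call needed
        value = fetch(key)
        self._store[key] = (now + self.ttl, value)
        return value

calls = []

def fake_api(query):
    # Stand-in for a real request to a provider's API (hypothetical).
    calls.append(query)
    return {"query": query, "results": []}

cache = TTLCache(ttl=60)
cache.get_or_fetch("weather", fake_api)
cache.get_or_fetch("weather", fake_api)  # served from the cache
print(len(calls))  # 1
```

Choosing the TTL is a trade-off: a longer value saves more API calls but serves staler data.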

API integration is a valuable tool in the data gathering process for your search engine. By leveraging the power of APIs, you can access a wealth of information from various sources, enriching the content and functionality of your search engine.

Building the Index

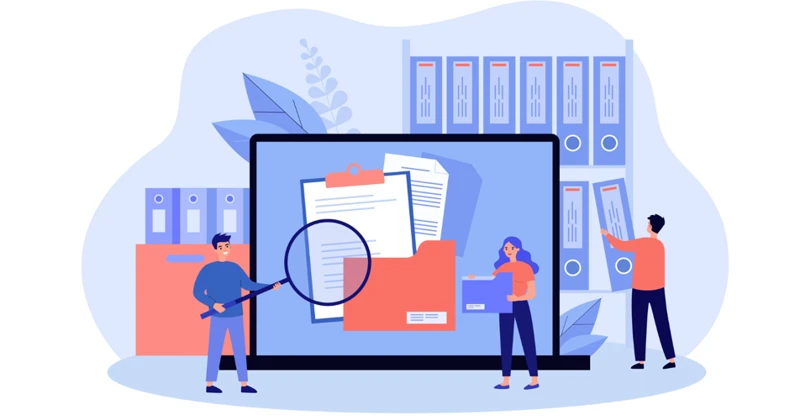

Building the index is a crucial step in creating a search engine. It involves two main processes: document parsing and inverted indexing.

Document Parsing: During document parsing, the search engine analyzes the content and structure of each web page. This process extracts important information such as the title, headings, paragraphs, and meta tags. By understanding the structure of the document, the search engine can better determine its relevance to search queries.

Inverted Indexing: Inverted indexing is the method used by search engines to organize and store information about web pages. It creates an inverted index, which maps keywords or terms to the documents in which they appear. This allows for efficient retrieval of relevant web pages based on user search queries. The inverted index contains information such as the frequency of terms and their location within the document.

By building a comprehensive index, search engines can quickly retrieve relevant results when users perform searches. It enables efficient searching and retrieval of information from the vast amount of data available on the web. The index forms the backbone of the search engine, ensuring that users can find the most relevant information quickly and accurately.

Document Parsing

Document parsing is a crucial step in the process of creating a search engine. It involves extracting relevant information from web pages and organizing it in a structured format that can be easily analyzed and indexed. Here are the key aspects of document parsing:

HTML Parsing: Web pages are typically written in HTML, a markup language that defines the structure and presentation of content on the internet. To parse a web page, the search engine needs to understand the underlying HTML code. It does this by parsing the HTML document and extracting important elements such as headings, paragraphs, links, and images.

Text Extraction: Once the HTML document is parsed, the search engine focuses on extracting the textual content from the web page. This includes the body text, titles, metadata, and other relevant information. The search engine may also remove any HTML tags or formatting to obtain only the raw text.

Tokenization: Tokenization is the process of breaking down the extracted text into smaller units called tokens. These tokens can be individual words, numbers, or other meaningful units. Tokenization allows the search engine to analyze the text at a granular level and perform operations such as counting word frequencies or identifying patterns.

Language Processing: Document parsing often involves language processing techniques to handle language-specific aspects such as stemming, stop word removal, and synonym identification. These techniques help improve the accuracy and relevance of search results by accounting for variations in word forms and meanings.

Metadata Extraction: In addition to the textual content, document parsing may also involve extracting metadata from web pages. Metadata includes information such as the title of the page, author name, publication date, and keywords. This metadata can provide valuable insights for indexing and ranking purposes.

Document parsing plays a vital role in transforming unstructured web page content into a structured format that can be efficiently processed and indexed by a search engine. By understanding the intricacies of document parsing, you can optimize your search engine to extract relevant information and provide accurate search results to users.
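The tokenization and language processing steps above can be sketched in a few lines. The stop word list is a tiny illustrative sample, and `naive_stem` is a deliberately crude suffix stripper used only as a placeholder; a production system would likely use an established stemmer instead.

```python
import re

# Small illustrative stop word list; real systems use much longer ones.
STOP_WORDS = {"a", "an", "and", "the", "is", "of", "to", "in"}

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def naive_stem(token):
    """Very rough suffix stripping, standing in for a real stemmer."""
    for suffix in ("ing", "s"):
        # Only strip when at least three characters would remain.
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def analyze(text):
    """Tokenize, drop stop words, then stem what is left."""
    return [naive_stem(t) for t in tokenize(text) if t not in STOP_WORDS]

print(analyze("The crawler is indexing pages and links"))
# ['crawler', 'index', 'page', 'link']
```

The same `analyze` function should be applied to both documents and queries so that their terms match in the index.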

Inverted Indexing

In the process of building a search engine, one crucial step is creating an inverted index. This indexing technique plays a vital role in improving the efficiency and speed of retrieving search results.

An inverted index is essentially a data structure that maps keywords or terms to the documents in which they appear. Unlike a forward index, which maps each document to the terms it contains, an inverted index flips this relationship, so a query term leads directly to the documents that match it.

To create an inverted index, the search engine analyzes the content of each document and extracts the relevant keywords or terms. These terms are then added to the index, along with a reference to the document in which they appear. This allows for quick retrieval of documents containing specific keywords.

The term dictionary of an inverted index is commonly implemented using a hash table or a trie. Each keyword or term is hashed or mapped to a location in the data structure that points to the list of documents containing it, often called a posting list, making it efficient to retrieve the documents associated with a particular keyword.

When a user enters a search query, the search engine looks up the keywords in the inverted index and retrieves the documents associated with those keywords. This process eliminates the need to search through every document in the index, significantly improving search performance.

Inverted indexing also enables the search engine to handle complex queries efficiently. By combining multiple keywords or terms, the search engine can quickly identify the documents that contain all the specified keywords or terms.

Inverted indexing is a fundamental component of a search engine’s architecture. It allows for efficient retrieval of search results based on keywords or terms, making the search process fast and accurate. By implementing inverted indexing in your search engine, you can enhance its search capabilities and provide users with relevant results in a fraction of a second.
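A minimal sketch of an inverted index, assuming a hash-table implementation (a Python dictionary) and a tiny made-up document collection. `build_index` and `search_all` are hypothetical helper names, and the tokenization is just whitespace splitting.

```python
from collections import defaultdict

docs = {
    1: "python web crawler",
    2: "python search engine",
    3: "web search ranking",
}

def build_index(documents):
    """Map each term to the set of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return index

def search_all(index, query):
    """Return IDs of documents containing every query term (an AND query)."""
    postings = [index.get(term, set()) for term in query.lower().split()]
    if not postings:
        return []
    return sorted(set.intersection(*postings))

index = build_index(docs)
print(search_all(index, "python search"))  # [2]
print(search_all(index, "web"))            # [1, 3]
```

The multi-keyword query is answered by intersecting posting sets, so no document text is scanned at query time.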

Ranking and Retrieval

Ranking and retrieval are crucial aspects of a search engine’s functionality. Once a search engine has gathered and indexed a vast amount of data, it needs to determine the relevance and order of the search results. One of the key algorithms used for ranking is PageRank, which evaluates the authority and importance of web pages by analyzing the number and quality of incoming links. Another important ranking signal is Term Frequency-Inverse Document Frequency (TF-IDF), a weighting scheme that assesses the importance of specific keywords by considering their frequency in a document and their rarity across the entire collection of documents. By utilizing these ranking techniques, search engines can provide users with the most relevant and useful results for their search queries.

PageRank Algorithm

The PageRank algorithm is a crucial component of search engine ranking systems. Developed by Larry Page and Sergey Brin, the founders of Google, PageRank revolutionized the way search engines determine the relevance and importance of web pages.

Link Analysis: The PageRank algorithm analyzes the links between web pages to assess their importance. It assigns a numerical value, known as PageRank score, to each page based on the number and quality of incoming links it receives. Pages with higher PageRank scores are considered more authoritative and are likely to rank higher in search results.

Importance of Incoming Links: The algorithm considers each incoming link to a web page as a vote of confidence. However, not all votes are equal. The quality and relevance of the linking page are taken into account. A link from a highly authoritative website carries more weight than a link from a less reputable source.

Passing PageRank: When a web page links to another page, it passes a portion of its own PageRank score to the linked page. This passing of PageRank helps distribute authority throughout the web and contributes to the overall ranking of pages.

Iterative Calculation: The PageRank algorithm iteratively calculates the PageRank scores of web pages. It starts by assigning an initial value to each page and then updates the scores based on the incoming links and their respective PageRank scores. This process continues until the PageRank scores converge and stabilize.

Relevance and Authority: The PageRank algorithm aims to provide users with the most relevant and authoritative results. By considering both the content relevance and the importance of incoming links, the algorithm helps search engines deliver high-quality search results.

Understanding the PageRank algorithm is crucial for creating a search engine that accurately ranks web pages. It is important to note that while PageRank used to be the primary factor in search engine ranking, modern search engines use a combination of algorithms that consider various factors, including relevance, user behavior, and content quality, to provide the best search results. By staying informed about these advancements, you can ensure your search engine remains competitive and effective in the ever-evolving digital landscape.
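The iterative calculation described above can be sketched as follows. This is a simplified illustration under stated assumptions, not Google's production algorithm: the damping factor of 0.85 is a commonly cited default, pages without outgoing links spread their score evenly over all pages, and the four-page `graph` is invented for the example.

```python
def pagerank(links, damping=0.85, tol=1e-9, max_iter=100):
    """links maps each page to the list of pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start with equal scores
    for _ in range(max_iter):
        # Score held by pages with no outgoing links is spread evenly.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for page, targets in links.items():
            for target in targets:
                # Each page passes an equal share of its score to every link.
                new_rank[target] += damping * rank[page] / len(targets)
        change = sum(abs(new_rank[p] - rank[p]) for p in pages)
        rank = new_rank
        if change < tol:  # scores have stabilized
            break
    return rank

graph = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
scores = pagerank(graph)
for page in sorted(scores, key=scores.get, reverse=True):
    print(page, round(scores[page], 3))
```

In this graph, page C collects links from three pages and ends up with the highest score, while page D, which nothing links to, ends up with the lowest.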

Term Frequency-Inverse Document Frequency (TF-IDF)

TF-IDF is a numerical statistic that is widely used in search engines to estimate the relevance of a document to a given search query. It is based on two ideas: the more often a term appears in a document, the more relevant that term is to the document, and the more documents a term appears in, the less it helps to distinguish any one of them. TF-IDF therefore combines two metrics: term frequency (TF) and inverse document frequency (IDF).

Term Frequency (TF) measures the frequency of a term within a document. It is calculated by dividing the number of times a term appears in a document by the total number of terms in that document. TF gives a higher weight to terms that appear more frequently, as they are likely to be more relevant to the overall content of the document.

Inverse Document Frequency (IDF) measures how rare a term is across a collection of documents. It is based on the ratio of the total number of documents to the number of documents that contain the term; in practice, the logarithm of this ratio is usually taken so that very rare terms do not dominate the score. IDF gives a higher weight to terms that appear in fewer documents, as they are rarer and potentially more significant.

To calculate the TF-IDF score for a term in a document, the term frequency is multiplied by the inverse document frequency. The result is a score that represents the importance of the term in the context of the entire collection of documents.

Search engines use TF-IDF scores to determine the relevance of a document to a search query. Documents that have a higher TF-IDF score for a particular term are considered more relevant and are more likely to be ranked higher in the search results.

By utilizing TF-IDF in your search engine, you can improve the accuracy and relevancy of the search results. It allows you to consider both the frequency of a term within a document and its significance across the entire collection of documents. This helps ensure that the most relevant and useful documents are presented to users when they perform a search query.
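Here is a small worked example of the calculation. It assumes the common logarithmic form of IDF, log(N / df), where N is the number of documents and df is the number of documents containing the term; other variants exist. The three documents and the function names are invented for the illustration.

```python
import math
from collections import Counter

docs = {
    "d1": "python web crawler python",
    "d2": "web search engine",
    "d3": "search ranking algorithm",
}

def tf(term, tokens):
    """Occurrences of term divided by the number of tokens in the document."""
    return Counter(tokens)[term] / len(tokens)

def idf(term, tokenized_docs):
    """log(N / df): terms found in fewer documents get a larger weight."""
    df = sum(1 for tokens in tokenized_docs.values() if term in tokens)
    return math.log(len(tokenized_docs) / df) if df else 0.0

def tf_idf(term, doc_id, tokenized_docs):
    return tf(term, tokenized_docs[doc_id]) * idf(term, tokenized_docs)

tokenized = {doc_id: text.split() for doc_id, text in docs.items()}
print(round(tf_idf("python", "d1", tokenized), 3))  # 0.549
print(round(tf_idf("web", "d1", tokenized), 3))     # 0.101
```

In d1, "python" scores higher than "web" for two reasons: it occurs twice rather than once (TF of 0.5 versus 0.25), and it appears in only one of the three documents rather than two (IDF of about 1.10 versus 0.41).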


Designing the User Interface

Designing the user interface (UI) is a crucial step in creating a search engine that is visually appealing and user-friendly. The UI should provide a seamless and intuitive experience for users to interact with the search engine. Front-end development plays a significant role in implementing the UI design, using HTML, CSS, and JavaScript to create the layout, visual elements, and interactive features. It is important to consider factors such as responsiveness, accessibility, and cross-browser compatibility when designing the UI to ensure that it can be accessed and used effectively across different devices and platforms. Additionally, implementing a robust search functionality is essential for users to input their queries and receive relevant search results. By focusing on the design and functionality of the user interface, you can enhance the overall user experience and make your search engine more engaging and user-friendly.

Front-end Development

Front-end development is a crucial aspect of creating a search engine, as it involves designing and implementing the user interface that users interact with. Here are some key considerations when it comes to front-end development:

User Interface Design: The user interface (UI) should be visually appealing and intuitive to navigate. It should provide users with easy access to search functionality and display search results in a clear and organized manner. Consider using responsive design techniques to ensure your search engine is accessible on different devices and screen sizes.

HTML and CSS: HTML (Hypertext Markup Language) is the backbone of any web page and is used to structure the content. CSS (Cascading Style Sheets) is used to define the visual appearance of the search engine, including colors, fonts, layout, and more. Make sure to write clean and semantic HTML code and use CSS to create a consistent and visually pleasing design.

JavaScript: JavaScript is a programming language used to add interactivity and dynamic features to web pages. In the context of a search engine, JavaScript can be used to enhance the user experience by implementing features such as autocomplete suggestions, real-time search results, and pagination.

Accessibility: It is important to ensure that your search engine is accessible to users with disabilities. Follow accessibility guidelines such as providing alt text for images (via the alt attribute), offering keyboard navigation options, and ensuring sufficient color contrast for readability.

Performance Optimization: Front-end performance plays a crucial role in user satisfaction. Optimize your search engine by minimizing the use of external resources, such as large images or unnecessary JavaScript libraries. Compress and minify your HTML, CSS, and JavaScript files to reduce load times.

By focusing on these aspects of front-end development, you can create a visually appealing, user-friendly, and efficient user interface for your search engine, enhancing the overall user experience.

Search Functionality

Search functionality is a crucial aspect of any search engine. It determines how users interact with the search engine and find the information they are looking for. When designing the search functionality for your search engine, there are several key considerations to keep in mind.

Query Processing: The first step in search functionality is processing the user’s search query. This involves analyzing the query to understand the user’s intent and extracting the relevant keywords. Advanced techniques such as natural language processing can be used to improve the accuracy of query processing.

Matching Algorithm: Once the query is processed, the search engine needs to match it with the relevant web pages in its index. This is done using a matching algorithm that compares the keywords in the query with the keywords and content of each web page. The algorithm can take into account factors such as keyword relevance, proximity, and semantic meaning.

Ranking and Sorting: After the matching algorithm identifies the relevant web pages, the search engine needs to rank and sort them based on their relevance to the user’s query. This involves applying ranking algorithms that consider factors such as keyword density, page authority, and user engagement signals. The goal is to present the most relevant and useful results at the top of the search results page.

Filtering and Refinement: To enhance the user experience, search functionality should include filtering and refinement options. Users may want to narrow down their search results based on specific criteria such as date, location, or content type. Providing these options allows users to find the most relevant information quickly.

Autocomplete and Suggestions: Another important aspect of search functionality is providing autocomplete suggestions as users type their search queries. This can help users refine their queries and discover related or popular search terms. Autocomplete suggestions can be generated based on historical search data or real-time user behavior.

By implementing robust and user-friendly search functionality, you can enhance the user experience and make your search engine more effective in delivering relevant results. Continuously improving and refining the search functionality based on user feedback and data analysis will ensure that your search engine remains competitive in the ever-evolving landscape of search technology.
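Autocomplete based on historical search data can be sketched with a sorted list and binary search, since all terms sharing a prefix sit next to each other once sorted. The `suggest` function and the list of past queries are hypothetical; a larger system might use a trie or rank suggestions by popularity instead.

```python
from bisect import bisect_left

def suggest(sorted_terms, prefix, limit=5):
    """Return up to limit terms from sorted_terms that start with prefix."""
    start = bisect_left(sorted_terms, prefix)  # first term >= prefix
    matches = []
    for term in sorted_terms[start:start + limit]:
        if not term.startswith(prefix):
            break  # past the block of terms sharing the prefix
        matches.append(term)
    return matches

# Hypothetical list of past queries, kept sorted so prefixes are contiguous.
past_queries = sorted(["search engine", "seo", "python", "search api", "pytest"])
print(suggest(past_queries, "sea"))  # ['search api', 'search engine']
print(suggest(past_queries, "py"))   # ['pytest', 'python']
```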

Testing and Optimization

Testing and optimization are crucial steps in the development of a search engine.

Quality Assurance: Conducting thorough testing ensures that all components of the search engine are functioning correctly. This includes checking for any bugs or errors, testing the accuracy of search results, and evaluating the user interface for usability and accessibility. It is important to test the search engine across multiple devices and browsers to ensure a seamless user experience.

Performance Optimization: Optimization techniques are implemented to improve the performance and speed of the search engine. This may involve optimizing the code, database queries, and server configurations. Additionally, caching mechanisms can be employed to store frequently accessed data, reducing the load on the server and enhancing the search engine’s responsiveness. Regular monitoring and performance testing help identify areas for improvement and ensure that the search engine is delivering results efficiently.

By prioritizing testing and optimization, you can enhance the user experience and ensure the smooth functioning of your search engine.

Quality Assurance

Quality assurance is a crucial step in the development process of a search engine. It involves testing and evaluating the functionality, usability, and performance of the search engine to ensure that it meets the highest standards of quality. Here are some key aspects to consider during the quality assurance phase:

Functionality Testing: This involves testing all the features and functionalities of the search engine to ensure that they work as intended. Testers need to verify that search queries are executed correctly, search results are displayed accurately, and any additional features such as filters or sorting options are functioning properly. It is essential to identify and fix any bugs or issues that may arise during this testing phase.

Usability Testing: Usability testing focuses on evaluating the user experience of the search engine. Testers simulate real-life scenarios to gauge how easily users can navigate the search interface, understand the search results, and perform common tasks such as refining searches or accessing advanced search options. This testing helps identify any usability issues or areas for improvement in terms of user interface design and overall user satisfaction.

Performance Testing: Performance testing aims to assess the speed, responsiveness, and scalability of the search engine. Testers measure the time it takes for search queries to be processed, the speed at which search results are displayed, and the overall performance under different loads or levels of user traffic. This testing helps identify any bottlenecks or performance issues that may affect the search engine’s efficiency.

Compatibility Testing: Compatibility testing ensures that the search engine functions correctly across different browsers, devices, and operating systems. Testers verify that the search engine is responsive and displays properly on various screen sizes and resolutions. They also check for compatibility with different browsers and ensure that all features and functionalities work seamlessly across different platforms.

Security Testing: Security testing is essential to identify and fix any vulnerabilities or weaknesses in the search engine’s security measures. Testers assess the system for potential threats, such as SQL injections, cross-site scripting, or unauthorized access. They also verify that user data is encrypted and stored securely, and that all privacy regulations and best practices are followed.

By conducting thorough quality assurance testing, search engine developers can ensure that their product is reliable, user-friendly, and performs optimally. This helps to build trust among users and enhances the overall search experience.
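As one small piece of performance testing, query latency can be measured with the standard-library `time.perf_counter`. The `measure_latency` helper and the `toy_search` function below are assumptions for illustration; `toy_search` stands in for the engine's real search function, and a real load test would also simulate concurrent users.

```python
import statistics
import time

def measure_latency(search, queries, repeats=20):
    """Time search(query) for each query; report median and max in ms."""
    samples = []
    for _ in range(repeats):
        for query in queries:
            start = time.perf_counter()
            search(query)
            samples.append((time.perf_counter() - start) * 1000)
    return {
        "count": len(samples),
        "median_ms": statistics.median(samples),
        "max_ms": max(samples),
    }

# Hypothetical stand-in for the engine's real search function.
def toy_search(query):
    corpus = ["python web crawler", "web search engine", "search ranking"]
    return [doc for doc in corpus if query in doc]

report = measure_latency(toy_search, ["web", "search", "missing"])
print(report)
```

Reporting the maximum alongside the median helps surface occasional slow queries that an average would hide.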

Performance Optimization

Performance optimization is a crucial aspect of creating a search engine. It involves making strategic changes to improve the speed and efficiency of your search engine, ensuring that it can handle a large volume of queries and deliver results quickly. Here are some key areas to focus on when optimizing the performance of your search engine:

Caching: Implementing caching mechanisms can significantly improve the performance of your search engine. Caching involves storing frequently accessed data in temporary storage, such as memory, to reduce the need for repetitive computations or database queries. By caching search results or frequently accessed web pages, you can reduce the processing time and improve the overall responsiveness of your search engine.

Indexing Optimization: Optimizing the indexing process can have a significant impact on the performance of your search engine. This includes efficient document parsing and indexing techniques. By optimizing the way your search engine parses and indexes web pages, you can reduce the time it takes to process new content and update the index, leading to faster search results.

Query Optimization: Optimizing the way search queries are processed is essential for improving search engine performance. This involves optimizing the algorithms used for ranking and retrieval, as well as implementing techniques like query caching and query rewriting. By fine-tuning these processes, you can reduce the time it takes to process search queries and deliver relevant results to users.

Hardware and Infrastructure: Ensuring that your search engine is hosted on reliable and scalable hardware infrastructure is crucial for optimal performance. Investing in high-performance servers, load balancers, and optimizing network configurations can help handle high traffic volumes and deliver search results quickly.

Testing and Monitoring: Regularly testing and monitoring the performance of your search engine is essential for identifying bottlenecks and areas for improvement. Implementing performance testing tools and monitoring systems can help you identify performance issues and make necessary optimizations to enhance the speed and efficiency of your search engine.

By focusing on performance optimization, you can create a search engine that delivers fast and accurate search results, providing a seamless user experience and ensuring the success of your search engine project. Remember, continuous monitoring and optimization are key to maintaining optimal performance over time.
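The caching of search results mentioned above can be sketched with the standard-library `functools.lru_cache`, which keeps the most recently used results in memory. The `run_query` function is a hypothetical stand-in for the expensive index lookup and ranking step.

```python
from functools import lru_cache

call_count = 0

def run_query(query):
    """Hypothetical stand-in for the expensive index lookup and ranking."""
    global call_count
    call_count += 1
    return tuple(sorted(query.split()))

@lru_cache(maxsize=1024)
def cached_search(normalized_query):
    return run_query(normalized_query)

def search(query):
    # Normalize first so "Web  Search" and "web search" share a cache entry.
    return cached_search(" ".join(query.lower().split()))

search("Web  Search")
search("web search")  # served from the cache
print(call_count)                       # 1
print(cached_search.cache_info().hits)  # 1
```

Cached results go stale when the index changes, so a design like this would need to call `cached_search.cache_clear()` after each index update.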

Deploying Your Search Engine

With the search engine built, the next step is deployment: making it reachable on the internet and keeping it running smoothly. Two decisions matter most. First, choose a server with enough resources to handle your search engine’s traffic and data requirements. Second, configure a domain so that it resolves to that server and users can reach it. Getting both right ensures that your search engine is up and running smoothly, providing users with a seamless search experience.

Choosing a Server

When it comes to creating a search engine, selecting the right server is crucial for ensuring optimal performance and reliability. There are several factors to consider when choosing a server for your search engine project:

1. Scalability: Your search engine may start small, but as it gains popularity and the amount of data it needs to handle increases, scalability becomes essential. Look for a server that allows you to easily scale up your resources, whether it’s adding more processing power, storage capacity, or bandwidth.

2. Speed and Performance: A fast and responsive server is vital for delivering search results quickly. Opt for a server with high-speed processors, sufficient RAM, and solid-state drives (SSDs) for faster data retrieval.

3. Reliability: Search engines need to be available 24/7 to handle user queries. Choose a server with a reliable uptime guarantee and robust hardware to minimize downtime and ensure uninterrupted service.

4. Security: Protecting user data and maintaining the security of your search engine is paramount. Look for a server with robust security features, such as firewalls, intrusion detection systems, and regular security updates.

5. Cost: Consider your budget when selecting a server. Compare different hosting providers and their pricing plans to find a balance between cost and the features you require for your search engine.

6. Support: Choose a server provider that offers excellent customer support. In case you encounter any technical issues or need assistance with server management, having reliable support can be invaluable.

The server you choose will have a significant impact on the performance, scalability, and reliability of your search engine. Take the time to research and evaluate different server options to ensure you make an informed decision that aligns with your project’s requirements and goals. By selecting the right server, you can lay a solid foundation for your search engine’s success.

Domain Configuration

Domain configuration is a crucial step in deploying your search engine. It involves setting up your domain name and ensuring that it is properly connected to your search engine server. Here are some key considerations for domain configuration:

1. Registering a Domain: Start by choosing a unique and memorable domain name for your search engine. Register the domain through a reliable domain registrar. Consider using keywords relevant to your search engine to improve its visibility in search results.

2. DNS Configuration: Once you have registered your domain, you need to configure the Domain Name System (DNS) settings. DNS translates your domain name into an IP address, allowing users to access your search engine. Configure the DNS settings to point your domain to the IP address of your search engine server.

3. SSL Certificate: Obtain an SSL/TLS certificate for your search engine so that it can be served over HTTPS. The certificate lets browsers verify your site’s identity and encrypts the user’s connection. This matters even if users never enter account details, because search queries themselves can reveal sensitive information.

4. Subdomains: If you want to create subdomains for different sections or functionalities of your search engine, configure them accordingly. Subdomains can help organize and structure your search engine’s features.

5. Domain Redirects: If you have previously used a different domain or if you want to redirect users from an old domain to your new search engine, set up domain redirects appropriately. This ensures that any traffic directed to the old domain is automatically redirected to the new one.

6. Domain Renewal: Keep track of your domain’s expiration date and renew it in a timely manner to prevent any disruption in your search engine’s availability.

Proper domain configuration is essential for ensuring the accessibility and functionality of your search engine. Take the time to carefully configure your domain settings to ensure a seamless user experience and maximize the reach of your search engine.
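After updating DNS records, it can be useful to confirm that the domain actually resolves to your server. The sketch below is one way to check this with Python's standard `socket` module. The function name `domain_points_to`, the domain `search.example.com`, and the address `203.0.113.10` are placeholders for illustration. It only checks IPv4 resolution.

```python
import socket
from typing import Callable


def domain_points_to(
    domain: str,
    expected_ip: str,
    resolve: Callable[[str], str] = socket.gethostbyname,
) -> bool:
    """Return True if `domain` resolves to `expected_ip` (IPv4 only)."""
    try:
        return resolve(domain) == expected_ip
    except socket.gaierror:
        # The name could not be resolved at all.
        return False


if __name__ == "__main__":
    # Placeholder values; substitute your own domain and server address.
    print(domain_points_to("search.example.com", "203.0.113.10"))
```

DNS changes can take time to propagate, so a `False` result shortly after an update does not necessarily mean the record is wrong.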

Conclusion

In conclusion, creating a search engine may seem like a daunting task, but with a step-by-step guide, it becomes an achievable endeavor. Understanding the inner workings of search engines, choosing the right programming language, gathering data, building the index, implementing ranking algorithms, designing the user interface, testing and optimizing, and deploying your search engine are all crucial steps in the process.

By comprehending the concepts of crawling, indexing, ranking, and search queries, you have gained valuable insights into how search engines function. These insights will help you make informed decisions and ensure the effectiveness and efficiency of your search engine.

It’s important to stay updated on the latest trends and advancements in search engine technology to keep your search engine competitive and provide users with the best possible experience. Remember to continuously test and optimize your search engine to improve its performance and user satisfaction.

Creating your own search engine is an exciting journey that requires dedication, knowledge, and perseverance. By following this step-by-step guide, you can turn your dream of having your own search engine into a reality. So, what are you waiting for? Start your journey and create a search engine that will revolutionize the way people find information on the internet.

Frequently Asked Questions

What is web scraping?

Web scraping is the process of extracting data from websites. It involves using automated tools or scripts to access and gather information from web pages, such as text, images, and links.

What is API integration?

API integration refers to the process of connecting and integrating data from external sources using application programming interfaces (APIs). APIs allow different systems to communicate and exchange data seamlessly.

What is document parsing?

Document parsing is the process of analyzing the structure and content of documents, such as web pages or text files. It involves extracting meaningful information and organizing it in a structured format for further processing.

What is inverted indexing?

Inverted indexing is a technique used by search engines to efficiently store and retrieve information from their index. It involves creating an index that maps keywords or terms to the web pages or documents where they appear.
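A minimal sketch of the idea, assuming documents are plain strings split on whitespace (a real engine would also normalize punctuation, stem words, and store positions). The function names here are illustrative.

```python
from collections import defaultdict


def build_inverted_index(documents: dict) -> dict:
    """Map each term to the set of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return index


def search(index: dict, query: str) -> set:
    """Return the IDs of documents containing every term in the query."""
    terms = query.lower().split()
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result
```

Because lookups go from term to documents, answering a query only touches the entries for the query's terms instead of scanning every document.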

What is the PageRank algorithm?

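The PageRank algorithm is a ranking algorithm developed by Google’s founders, Larry Page and Sergey Brin. It analyzes the links between web pages to estimate their importance. A link from one page to another counts as a vote, and votes from pages that are themselves highly ranked carry more weight, so pages with many inbound links from reputable websites are considered more authoritative and tend to rank higher in search results.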
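The following is a simplified sketch of PageRank computed by repeated iteration over a small link graph. It assumes a damping factor of 0.85 (a commonly cited value) and a fixed number of iterations, and it spreads the rank of pages without outgoing links evenly across all pages. It illustrates the idea only and is not how a production search engine computes rankings.

```python
def pagerank(links: dict, damping: float = 0.85, iterations: int = 50) -> dict:
    """Estimate PageRank for a graph given as {page: [pages it links to]}."""
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page starts each round with the "random jump" share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's rank equally among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling page: distribute its rank over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank
```

For example, in the graph `{"a": ["c"], "b": ["c"], "c": ["a"]}`, page `c` receives links from both `a` and `b` and ends up with the highest score, while `b`, which nothing links to, ends up with the lowest.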

What is TF-IDF?

TF-IDF stands for Term Frequency-Inverse Document Frequency. It is a numerical statistic used to measure how important a term is to a document within a collection of documents. TF-IDF takes into account both the frequency of a term in a document and its rarity in the entire collection, so a term scores highest when it appears often in one document but in few others.
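One common formulation, shown here as a sketch, multiplies the term's relative frequency in the document by the logarithm of the collection size divided by the number of documents containing the term. Several variants of this weighting exist. The function below assumes documents are already split into lists of lowercase tokens, and its name and signature are illustrative.

```python
import math


def tf_idf(term: str, document: list, corpus: list) -> float:
    """Score `term` for one tokenized document within a tokenized corpus."""
    if not document:
        return 0.0
    # Term frequency: share of the document's tokens equal to the term.
    tf = document.count(term) / len(document)
    # Document frequency: how many documents contain the term at all.
    containing = sum(1 for doc in corpus if term in doc)
    if containing == 0:
        return 0.0
    idf = math.log(len(corpus) / containing)
    return tf * idf
```

A term that appears in every document gets an inverse document frequency of `log(1) = 0`, so very common words contribute nothing to the score.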

What is front-end development?

Front-end development refers to the process of creating and implementing the user interface of a website or application. It involves designing and coding the visual elements that users interact with, such as menus, buttons, and forms.

What is search functionality?

Search functionality refers to the ability of a website or application to allow users to search for specific content or information. It involves implementing a search bar, processing search queries, and presenting relevant results to the users.

What is quality assurance?

Quality assurance is the process of ensuring that software or a system meets specified requirements and quality standards. For a search engine, it involves testing and reviewing functionality, usability, and performance to identify and fix any issues or bugs.

What is performance optimization?

Performance optimization involves improving the speed, efficiency, and overall performance of a search engine. It includes techniques such as code optimization, caching, and server tuning to enhance the user experience and reduce response times.