Log files are records of server activity that capture every request made to a website, whether it comes from a human visitor or a search engine bot. They are important for SEO because they show how search engine bots actually crawl the site, help identify technical issues that may affect its performance, and offer insights into user behavior.

Why Log File Analysis is Important for SEO and Online Marketing

– Provides insights into search engine crawling: Log file analysis allows businesses to see how search engine bots actually crawl their websites. By examining the log files, you can identify which pages are crawled frequently, how often search engines visit your site, and which sections they rarely reach. Logs record crawling rather than indexing, but this information is crucial for optimizing your website’s structure and ensuring that search engines can easily access the content you want indexed.

– Identifies and resolves technical issues: Log file analysis helps in identifying and resolving technical issues that may hinder your website’s performance in search engine rankings. By analyzing the log files, you can uncover crawl errors, server errors, and redirect issues that may negatively impact your SEO efforts. Fixing these issues promptly can improve your website’s visibility and user experience.

– Optimizes crawl budget: Log file analysis allows you to understand how search engine bots allocate crawl budget to your website. By analyzing the log files, you can identify pages that receive excessive crawling and those that are not being crawled frequently. This information helps you optimize your crawl budget by prioritizing the crawling of important pages and reducing unnecessary crawling on low-value pages.

– Informs keyword targeting: Log files show which landing pages receive visits referred by search engines, but they rarely contain the search queries themselves, because major search engines serve results over HTTPS and generally omit the query from the referrer. Pairing this landing-page data with query reports from a tool such as Google Search Console shows which keywords and phrases drive organic traffic to each page, allowing you to optimize your content and target relevant keywords. This can help improve your website’s visibility in search engine results pages (SERPs) and attract more qualified traffic.

– Enhances user experience: Log file analysis helps in understanding user behavior on your website. By examining the log files, you can analyze user agent data, including the devices, browsers, and operating systems used by your visitors. This information can help you optimize your website for different user agents, ensuring a seamless user experience across various platforms.

Log file analysis is a powerful tool for SEO and online marketing success. It provides valuable insights into website performance, search engine crawling and indexing, technical issues, keyword targeting, and user behavior. By leveraging the information obtained from log file analysis, businesses can make informed decisions, optimize their websites, and achieve better visibility and rankings in search engine results.

1. Understanding Log Files and Their Role

Understanding log files and their role is essential for effective log file analysis in SEO and online marketing. Log files are generated by web servers and contain a detailed record of every request made to a website. They provide valuable information about user activity, server responses, and search engine bot behavior.
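To make “a detailed record of every request” concrete, here is a minimal Python sketch that splits one access-log line into named fields. It assumes the NCSA Combined Log Format, a common default on Apache and Nginx; the sample line and the `parse_line` helper are illustrative assumptions rather than part of any particular tool.

```python
import re

# NCSA Combined Log Format (assumed):
# host ident authuser [timestamp] "method path protocol" status bytes "referer" "user-agent"
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

def parse_line(line):
    """Return a dict of named fields for one log line, or None if it doesn't match."""
    match = COMBINED.match(line)
    return match.groupdict() if match else None

# Invented sample line for illustration.
sample = ('66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] '
          '"GET /products/shoes?color=red HTTP/1.1" 200 5120 "-" '
          '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"')

record = parse_line(sample)
print(record["ip"], record["path"], record["status"])
```

Each field (client IP, timestamp, requested URL, status code, referrer, user agent) is what the later analysis steps count and filter on.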

The role of log files in SEO and online marketing is multifaceted. Firstly, log files help in monitoring website performance by providing insights into server errors, response times, and resource usage. This information allows businesses to identify and address any technical issues that may be affecting user experience and search engine visibility.

Secondly, log files play a crucial role in understanding how search engine bots crawl a website. By analyzing the log files, businesses can see how often bots visit, which pages they request, and which sections they rarely reach. Logs record crawling rather than indexing, but this information helps in optimizing website structure so that important pages are crawled regularly and can be indexed.

Log files also support keyword research and targeting, though indirectly. Because search engines generally omit the query from the referrer, logs show which pages receive organic visits rather than the queries behind them; combining that data with query reports from a tool such as Google Search Console reveals the keywords and phrases driving organic traffic to your site. This information can be used to optimize content, improve keyword targeting, and attract more relevant traffic.

Understanding log files and their role is fundamental to successful log file analysis. It enables businesses to uncover valuable insights about website performance, search engine crawling and indexing, and user behavior. By leveraging this information, businesses can make informed decisions, optimize their websites, and achieve better SEO and online marketing results.

2. Benefits of Log File Analysis for SEO

Log file analysis offers several benefits for SEO that can significantly impact a website’s performance and visibility in search engine results. Here are some key benefits of log file analysis for SEO:

– Identifying crawl budget optimization opportunities: Log file analysis allows businesses to understand how search engine crawlers allocate their crawl budget. By analyzing log files, you can identify pages that receive excessive crawling and those that are not being crawled frequently. This information helps you optimize your crawl budget by prioritizing the crawling of important pages and reducing unnecessary crawling on low-value pages. This optimization can lead to better indexing and visibility of your most valuable content.

– Uncovering technical issues: Log files provide a wealth of information about a website’s technical health. By analyzing log files, you can identify crawl errors, server errors, and redirect issues that may negatively impact your SEO efforts. Fixing these issues promptly can improve your website’s visibility and ensure a positive user experience. For example, if you discover server errors in the log files, you can take immediate action to resolve them and prevent search engine bots from encountering these errors when crawling your site.

– Improving keyword targeting: Log file analysis shows which pages receive organic search traffic, although the search queries themselves are usually missing because search engines generally omit them from the referrer. Pairing this with query data from Google Search Console reveals the keywords and phrases users are using to find your site. This information helps you optimize your content and target relevant keywords that align with user intent. By incorporating these keywords strategically into your website’s content, meta tags, and headings, you can improve your website’s visibility in search engine results and attract more qualified traffic.

– Enhancing website structure and navigation: Log file analysis can help you understand how search engine bots crawl and navigate your website. By examining the log files, you can identify the most frequently crawled pages, how deep into your site bots go, and which sections they rarely reach. This information enables you to optimize your website’s structure and navigation, ensuring that search engines can easily access and index your content. For example, if you notice that certain important pages are not being crawled frequently, you can adjust your internal linking structure to improve their visibility and accessibility.

– Optimizing for user experience: Log file analysis provides insights into user behavior, including the devices, browsers, and operating systems used by your visitors. By analyzing user agent data in the log files, you can optimize your website for different user agents, ensuring a seamless user experience across various platforms. For example, if you discover that a significant portion of your traffic comes from mobile devices, you can prioritize mobile optimization efforts to deliver a responsive and user-friendly experience to mobile users.
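Since search engines generally omit the query from the referrer, the keyword-related signal a raw log usually offers is which landing pages receive search-engine referrals. The Python sketch below counts those pages. It assumes the Combined Log Format; `SEARCH_HOSTS` is a hypothetical, deliberately simple list of referrer host substrings, and the sample lines are invented.

```python
import re
from collections import Counter
from urllib.parse import urlparse

# Assumed NCSA Combined Log Format.
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

# Hypothetical substrings identifying search-engine referrer hosts; adjust for your markets.
SEARCH_HOSTS = ("google.", "bing.com", "duckduckgo.com")

def organic_landing_pages(lines):
    """Count requested paths whose Referer host matches a known search engine."""
    pages = Counter()
    for line in lines:
        m = COMBINED.match(line)
        if not m:
            continue
        host = urlparse(m["referer"]).netloc.lower()  # "-" parses to an empty host
        if any(token in host for token in SEARCH_HOSTS):
            pages[m["path"]] += 1
    return pages

lines = [
    '203.0.113.5 - - [10/Oct/2023:14:02:11 +0000] "GET /blog/log-files HTTP/1.1" 200 8300 '
    '"https://www.google.com/" "Mozilla/5.0 (Windows NT 10.0)"',
    '203.0.113.9 - - [10/Oct/2023:14:05:40 +0000] "GET /blog/log-files HTTP/1.1" 200 8300 '
    '"https://www.bing.com/" "Mozilla/5.0 (Macintosh)"',
    '198.51.100.7 - - [10/Oct/2023:14:07:02 +0000] "GET /pricing HTTP/1.1" 200 4100 '
    '"https://example.org/review" "Mozilla/5.0 (X11; Linux)"',
]

print(organic_landing_pages(lines).most_common())  # [('/blog/log-files', 2)]
```

Substring matching is crude (`google.` would also match an unrelated host containing that text), so treat the counts as a starting point to cross-check against Search Console.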

Log file analysis is a valuable SEO tool that offers numerous benefits for improving website performance, identifying technical issues, optimizing keyword targeting, enhancing website structure, and optimizing user experience. By leveraging the insights gained from log file analysis, businesses can make data-driven decisions, improve their SEO strategies, and achieve better rankings and visibility in search engine results.

How to Analyze Log Files

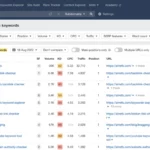

– Setting Up Log File Analysis Tools: The first step in analyzing log files is to set up the necessary tools. There are various log file analysis tools available, such as Screaming Frog Log File Analyzer, Splunk, and the ELK Stack. Google Search Console’s Crawl Stats report offers a useful complementary view of Googlebot activity, but it does not read your server’s log files. Choose a tool that suits your needs and follow the instructions provided to set it up properly.

– Choosing the Right Log File Format: The format of your log files depends on your web server (such as Apache, Nginx, or IIS) and how it is configured. It is essential to set up your analysis tool for the format your server actually writes. Each format has its own structure and fields, so matching it correctly ensures accurate analysis of the log files.

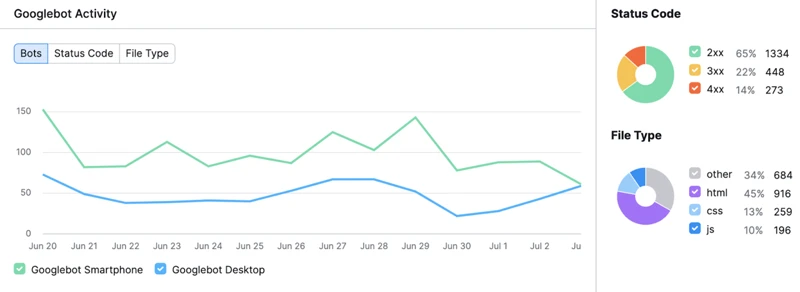

– Identifying Important Metrics in Log Files: Once you have set up the log file analysis tool and selected the appropriate log file format, it’s time to identify the important metrics to focus on. Some key metrics to consider include crawl frequency, response codes, user agents, URLs visited, and referrers. These metrics provide valuable insights into how search engines are interacting with your website.

– Analyzing Crawling and Indexing Patterns: Analyzing crawling and indexing patterns is a crucial step in log file analysis. Look for patterns in the log files that indicate how search engine bots are crawling your site. Pay attention to the frequency of crawls, the pages being crawled, and any anomalies or irregularities. Understanding these patterns can help you optimize your website’s structure and ensure search engines are able to index your content effectively.

– Identifying Crawl Budget Issues: Crawl budget refers to the number of pages search engine bots are willing to crawl on your website within a given timeframe. Analyzing log files can help identify crawl budget issues, such as excessive crawling on low-value pages or pages that are not being crawled at all. By identifying and resolving crawl budget issues, you can ensure that search engines are focusing their efforts on the most important pages of your website.

– Finding and Fixing Redirect Issues: Log file analysis can help uncover redirect issues that may be affecting your website’s SEO performance. Look for response codes indicating redirects (such as 301 or 302) and examine the URLs involved. Redirect issues can negatively impact user experience and search engine rankings, so it’s important to fix them promptly.

– Identifying and Resolving Server Errors: Server errors, such as 500 Internal Server Error or 503 Service Unavailable, can hinder search engine crawling and indexing. Analyzing log files can help identify server errors and their frequency. Once identified, take necessary steps to resolve these errors promptly to ensure smooth website functioning and improved SEO performance.

– Analyzing User Agent Data: User agent data provides insights into the devices, browsers, and operating systems used by your website visitors. Analyzing user agent data from log files can help you understand the preferences of your audience and optimize your website accordingly. Ensure that your website is compatible with popular user agents to enhance the user experience and drive more traffic.

By following these steps, you can effectively analyze log files and gain valuable insights for improving your SEO and online marketing strategies.

1. Setting Up Log File Analysis Tools

Setting up log file analysis tools is the first step in analyzing log files for SEO and online marketing success. Here are the key steps to follow:

1. Choose a log file analysis tool: There are several log file analysis tools available in the market, each with its own features and capabilities. Some popular options include Screaming Frog Log File Analyzer, Logz.io, and AWStats. Research and choose a tool that best suits your needs and budget.

2. Install the log file analysis tool: Once you have chosen a tool, follow the installation instructions provided by the tool’s documentation. This usually involves downloading the tool, running the installer, and configuring any necessary settings.

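3. Access log files: Log files are typically stored on your web server. To access them, you can use an SFTP client or a file manager provided by your hosting provider. Locate the log files for your website and download them to your local machine. If your site runs behind a CDN or load balancer, some requests may be answered there and never reach your server, so collect those logs as well.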

4. Configure the log file analysis tool: Open the log file analysis tool and configure it to analyze the downloaded log files. This usually involves specifying the location of the log files, selecting the desired settings, and setting up any necessary filters or exclusions.

5. Import log files: Use the tool’s import function to load the log files into the analysis tool. This may involve selecting the log file format, specifying the log file location, and importing the files.

6. Analyze the log files: Once the log files are imported, you can start analyzing them. Explore the different features and functionalities of the tool to gain insights into your website’s performance, search engine crawling, user behavior, and other important metrics.
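Steps 3 to 5 can also be scripted if you prefer to work outside a packaged tool. The Python sketch below reads every downloaded log in a local folder, including rotated files compressed with gzip, as one stream of lines. The logrotate-style file names (`access.log`, `access.log.1.gz`) and the `iter_log_lines` helper are assumptions; adjust the pattern to match your server.

```python
import gzip
import tempfile
from pathlib import Path

def iter_log_lines(directory, pattern="access.log*"):
    """Yield lines from every matching file, decompressing rotated .gz files on the fly."""
    # Sorted for a deterministic order; this is not necessarily chronological order.
    for path in sorted(Path(directory).glob(pattern)):
        opener = gzip.open if path.suffix == ".gz" else open
        with opener(path, "rt", encoding="utf-8", errors="replace") as handle:
            for line in handle:
                yield line.rstrip("\n")

# Demo: a temporary folder stands in for your downloaded logs.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "access.log").write_text("line A\n", encoding="utf-8")
    with gzip.open(Path(tmp, "access.log.1.gz"), "wt", encoding="utf-8") as f:
        f.write("line B\n")
    lines = list(iter_log_lines(tmp))

print(lines)
```

Because the function is a generator, large logs are read one line at a time instead of being loaded into memory at once.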

By setting up log file analysis tools, you can effectively analyze and interpret log file data to make data-driven decisions for your SEO and online marketing strategies.

2. Choosing the Right Log File Format

When it comes to log file analysis, choosing the right log file format is essential for accurate and effective analysis. Here are some factors to consider when selecting the appropriate log file format:

1. Server log file format: The server log file format determines how the log data is recorded and organized. Common log file formats include the NCSA Common Log Format (CLF), the NCSA Combined Log Format (CLF plus the referrer and user agent), and the W3C Extended Log File Format used by IIS. Each format has its own advantages and disadvantages, so it’s important to choose one that aligns with your specific needs and the capabilities of your log analysis tools.

2. Data granularity: The log file format should provide sufficient data granularity to extract valuable insights. This includes information such as the date and time of each request, the requested URL, the HTTP response code, and the user agent string. The more detailed the log file format, the more comprehensive the analysis can be.

3. Compatibility with log analysis tools: Ensure that the log file format is compatible with the log analysis tools you plan to use. Different tools may have specific requirements for log file formats, so it’s important to check the documentation or consult with the tool’s support team to ensure compatibility.

4. Log file size: Consider the size of your log files and the storage capacity available. Formats that record more fields, such as the Combined Log Format, produce larger files. Those extra fields, especially the user agent, are what let you separate search engine bots from human visitors, so if storage is limited, compress rotated log files (for example with gzip) rather than switching to a format that drops them.

5. Accessibility and ease of parsing: Choose a log file format that is easily accessible and can be parsed efficiently. This will make the analysis process smoother and more time-effective. Some log file formats, like the Common Log Format (CLF), have a simple structure that can be parsed easily using regular expressions or log analysis tools.
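The difference between the two NCSA formats is easy to see in code: the Combined format is the Common format with two extra quoted fields, the referrer and the user agent. This Python sketch, using sample lines modeled on the examples in the Apache HTTP Server logging documentation, checks which layout a line follows. It is a simplified check rather than a full parser, and the `detect_format` helper is hypothetical. Note that a Common-format log has no user-agent field, so it cannot tell search engine bots apart from visitors.

```python
import re

# NCSA Common Log Format: host ident authuser [date] "request" status bytes
COMMON = re.compile(r'\S+ \S+ \S+ \[[^\]]+\] "[^"]*" \d{3} \S+$')
# NCSA Combined Log Format: the Common fields plus "referer" "user-agent"
COMBINED = re.compile(r'\S+ \S+ \S+ \[[^\]]+\] "[^"]*" \d{3} \S+ "[^"]*" "[^"]*"$')

def detect_format(line):
    """Return 'combined', 'common', or None for a single access-log line."""
    line = line.rstrip("\n")
    if COMBINED.match(line):
        return "combined"
    if COMMON.match(line):
        return "common"
    return None

common_line = '127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326'
combined_line = common_line + ' "http://www.example.com/start.html" "Mozilla/4.08 [en] (Win98; I ;Nav)"'

print(detect_format(common_line), detect_format(combined_line))  # common combined
```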

By carefully considering these factors, you can choose the right log file format that best suits your needs and facilitates accurate and insightful log file analysis. Remember, the chosen format will impact the quality and depth of the insights you gain from your log file analysis.

3. Identifying Important Metrics in Log Files

When analyzing log files for SEO and online marketing success, it is important to identify and focus on key metrics that provide valuable insights into website performance. Here are some important metrics to consider:

– Crawl Frequency: Analyzing log files helps you understand how often search engine bots crawl your website. By identifying the pages that are crawled frequently, you can check that your most important content is being revisited regularly, since search engines must recrawl a page before they can pick up and index changes to it.

– Response Codes: Log files record the HTTP response code returned for every request, including those from search engine bots. Monitoring these response codes, such as 200 (OK), 404 (Not Found), or 500 (Internal Server Error), can help you identify and address any technical issues affecting your website’s performance.

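– Response Time: Log files can record how long the server took to handle each request, provided your log format is configured to include it (for example Apache’s %D or Nginx’s $request_time). By analyzing this metric, you can identify slow URLs and take steps to optimize them. It measures server processing time rather than the full page load time a visitor experiences in the browser, but faster server responses improve user experience and let search engine bots crawl more of your site without overloading it.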

– URL Structure: Log file analysis allows you to examine the structure of your website’s URLs. By understanding how search engine bots navigate and crawl your URLs, you can optimize your website’s structure for better indexing and ranking. This includes identifying any URL parameters or URL patterns that may need to be addressed.

– Referring Pages: Log files provide information on the referring pages that drive traffic to your website. By analyzing this data, you can identify which external sources are sending the most traffic and focus your marketing efforts accordingly. This can help you optimize your link-building strategies and target high-value referral sources.

– User Agents: Log files contain data on the user agents accessing your website, including information about the devices, browsers, and operating systems used. Analyzing this information can help you understand your audience’s preferences and optimize your website’s design and functionality accordingly.
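Several of these metrics fall out of a simple count over parsed lines. The Python sketch below tallies response codes and requested paths for hits whose user agent contains a token such as `Googlebot`. It assumes the Combined Log Format and uses invented sample lines; the `summarize` helper is hypothetical. A user-agent match alone does not prove a request came from Google, since the string can be spoofed.

```python
import re
from collections import Counter

# Assumed NCSA Combined Log Format.
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

def summarize(lines, agent_token="Googlebot"):
    """Count status codes and requested paths for hits whose user agent contains agent_token."""
    statuses, paths = Counter(), Counter()
    for line in lines:
        m = COMBINED.match(line)
        if m and agent_token in m["agent"]:
            statuses[m["status"]] += 1
            paths[m["path"]] += 1
    return statuses, paths

GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
lines = [
    f'66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 512 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2023:13:56:02 +0000] "GET /old-page HTTP/1.1" 404 0 "-" "{GOOGLEBOT}"',
    '203.0.113.5 - - [10/Oct/2023:14:02:11 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

statuses, paths = summarize(lines)
print(statuses.most_common(), paths.most_common(5))
```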

By identifying and analyzing these important metrics in log files, you can gain valuable insights into your website’s performance, identify areas for improvement, and optimize your SEO and online marketing strategies accordingly.

4. Analyzing Crawling and Indexing Patterns

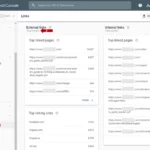

– Identify frequently crawled pages: Analyzing crawling and indexing patterns in log files allows you to identify which pages on your website are being crawled most frequently by search engine bots. This information helps you understand which pages are considered important by search engines and can guide you in optimizing those pages further for improved visibility in search engine results.

– Spot irregular crawling behavior: Log file analysis enables you to identify any irregular crawling patterns that may be occurring on your website. For example, you may notice that certain pages are being crawled excessively, which can indicate a potential issue such as infinite crawling loops or duplicate content problems. By identifying and addressing these irregularities, you can ensure that search engine bots are efficiently crawling your website and not wasting resources on unnecessary pages.

– Monitor discovery of new content: Analyzing log files shows when search engine bots first request a new URL, which tells you how quickly new content is being discovered and crawled. Logs do not record whether a page was indexed, so confirm indexing with a tool such as Google Search Console. If you notice that new pages are crawled late or not at all, you can investigate the issue further and take steps, such as adding internal links or listing the pages in your XML sitemap, to help search engines find them.

– Identify rarely crawled pages: Log file analysis helps you identify pages on your website that receive minimal or no crawling from search engine bots. Some of these are low-value pages that may not be contributing to your SEO efforts and can be improved, consolidated, or removed. Others may be important pages that are poorly linked and need stronger internal links. Keep in mind that it is low-value pages crawled heavily, not rarely crawled ones, that consume crawl budget; reducing crawling on those frees up capacity for the pages that matter most, improving the overall performance and visibility of your website in search engine results.

– Track crawl frequency over time: By analyzing log files, you can track the crawl frequency of your website over time. This information allows you to identify any changes in crawling patterns and understand how search engines are interacting with your site. For example, you may notice an increase in crawling after publishing new content or a decrease in crawling during periods of site maintenance. Understanding these patterns can help you make informed decisions about your SEO strategy and content creation schedule.
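Tracking crawl frequency over time mostly means bucketing bot hits by date. This Python sketch counts daily requests from user agents containing `Googlebot`, assuming the Combined Log Format and its usual timestamp layout (`10/Oct/2023:13:55:36 +0000`); the sample lines and the `daily_crawl_counts` helper are invented for illustration.

```python
import re
from collections import Counter
from datetime import datetime

# Assumed NCSA Combined Log Format.
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

def daily_crawl_counts(lines, agent_token="Googlebot"):
    """Return a Counter mapping each date to the number of hits from agent_token."""
    per_day = Counter()
    for line in lines:
        m = COMBINED.match(line)
        if not m or agent_token not in m["agent"]:
            continue
        stamp = datetime.strptime(m["time"], "%d/%b/%Y:%H:%M:%S %z")
        per_day[stamp.date()] += 1  # date in the timezone recorded in the log
    return per_day

GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
lines = [
    f'66.249.66.1 - - [10/Oct/2023:01:15:00 +0000] "GET / HTTP/1.1" 200 512 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2023:18:40:00 +0000] "GET /blog HTTP/1.1" 200 900 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [11/Oct/2023:09:05:00 +0000] "GET / HTTP/1.1" 200 512 "-" "{GOOGLEBOT}"',
    '203.0.113.5 - - [10/Oct/2023:12:00:00 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

counts = daily_crawl_counts(lines)
for day, hits in sorted(counts.items()):
    print(day, hits)
```

Plotting these daily counts over weeks makes spikes after publishing, or dips during maintenance, easy to spot.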

Analyzing crawling and indexing patterns through log file analysis provides valuable insights into how search engine bots interact with your website. By understanding which pages are crawled frequently, spotting irregularities, monitoring how quickly new content is discovered, identifying rarely crawled pages, and tracking crawl frequency over time, you can optimize your website for improved search engine visibility and performance.

5. Identifying Crawl Budget Issues

Identifying crawl budget issues is a crucial step in log file analysis for SEO and online marketing success. Here are some key points to consider:

– Understand crawl budget: Crawl budget refers to the number of pages search engine bots are willing to crawl on your website within a given time frame. It is important to ensure that search engines are spending their crawl budget wisely on your most important and valuable pages.

– Analyze crawl frequency: By examining the log files, you can determine how often search engine bots are crawling your website. Look for patterns and fluctuations in crawl frequency to identify any crawl budget issues. If certain pages are being crawled too frequently or not at all, it may indicate a problem with crawl budget allocation.

– Identify low-value pages: Log file analysis can help you identify low-value pages that may be consuming a significant portion of your crawl budget. These pages may include duplicate content, thin content, or pages with little organic traffic. By identifying and optimizing or removing these low-value pages, you can free up crawl budget for more important pages.

– Optimize important pages: Log file analysis allows you to identify the pages that are most important for your website’s SEO and online marketing success. These may include key landing pages, product pages, or content that drives organic traffic. By ensuring these pages are being crawled frequently and efficiently, you can maximize their visibility in search engine results.

– Fix crawl budget issues: Once you have identified crawl budget issues, it’s important to take action to resolve them. This may involve optimizing your website’s internal linking structure, implementing canonical tags to consolidate duplicate content, or using robots.txt Disallow rules to keep crawlers away from low-value URLs. Note that “noindex” does not stop crawling, because a bot has to fetch the page to see the directive, and “nofollow” is treated only as a hint, so neither reliably saves crawl budget.
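One rough way to size crawl waste is to measure how much bot activity goes to parameterized URLs, which faceted navigation and tracking parameters often multiply. The Python sketch below assumes the Combined Log Format and treats any URL with a query string as a candidate low-value page; that proxy, the `crawl_waste` helper, and the sample lines are assumptions, so review the flagged paths before blocking anything.

```python
import re
from collections import Counter

# Assumed NCSA Combined Log Format.
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

def crawl_waste(lines, agent_token="Googlebot"):
    """Return (share of bot hits on URLs with a query string, Counter of their base paths)."""
    total, param_hits = 0, Counter()
    for line in lines:
        m = COMBINED.match(line)
        if not m or agent_token not in m["agent"]:
            continue
        total += 1
        if "?" in m["path"]:
            param_hits[m["path"].split("?", 1)[0]] += 1  # group by path without parameters
    share = sum(param_hits.values()) / total if total else 0.0
    return share, param_hits

GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
lines = [
    f'66.249.66.1 - - [10/Oct/2023:10:00:00 +0000] "GET /shoes?color=red HTTP/1.1" 200 700 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2023:10:00:05 +0000] "GET /shoes?color=blue HTTP/1.1" 200 700 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2023:10:00:09 +0000] "GET /shoes HTTP/1.1" 200 700 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2023:10:00:14 +0000] "GET /about HTTP/1.1" 200 300 "-" "{GOOGLEBOT}"',
    '203.0.113.5 - - [10/Oct/2023:10:01:00 +0000] "GET /shoes?color=green HTTP/1.1" 200 700 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

share, param_hits = crawl_waste(lines)
print(f"{share:.0%} of Googlebot hits went to parameterized URLs:", param_hits.most_common())
```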

By identifying and addressing crawl budget issues, you can ensure that search engine bots are spending their time and resources effectively on your website. This can lead to improved crawling, indexing, and ultimately, better search engine rankings and online marketing success.

6. Finding and Fixing Redirect Issues

– Identify redirect issues: Log file analysis enables you to identify any redirect issues on your website. By examining the log files, you can pinpoint instances where users are being redirected from one URL to another. This could be due to broken or outdated links, incorrect redirects, or other technical issues. Identifying these redirect issues is crucial as they can negatively impact your website’s SEO performance and user experience.

– Analyze redirect patterns: Log file analysis allows you to analyze the patterns of redirects on your website. You can determine the frequency and types of redirects occurring, such as 301 and 308 permanent redirects or 302 and 307 temporary redirects, and spot 404 errors on old URLs that may need a redirect. Understanding these patterns helps you identify any inconsistencies or excessive redirects that may need to be addressed.

– Fix redirect errors: Once you have identified redirect issues through log file analysis, it’s important to take corrective measures. You can update or fix broken links, ensure proper redirect implementation, or address any other technical issues causing the redirects. By resolving these redirect errors, you can improve user experience, prevent loss of link equity, and ensure that search engines can properly crawl and index your website.

– Monitor redirect effectiveness: Log file analysis allows you to monitor the effectiveness of your redirects over time. By regularly analyzing the log files, you can track the number of redirects occurring, evaluate their impact on user behavior and website performance, and make necessary adjustments if needed. This helps you ensure that your redirects are functioning as intended and are benefiting your website’s SEO and online marketing efforts.

– Utilize redirect best practices: Log file analysis provides valuable insights into the effectiveness of your redirect strategy. Based on the data obtained from the log files, you can assess whether your redirects are aligned with best practices, such as using 301 redirects for permanent URL changes or, where a redirect is not appropriate, implementing canonical tags to consolidate duplicate content. By following redirect best practices, you can optimize your website’s SEO performance and prevent any negative consequences from incorrect or excessive redirects.
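A starting point for redirect analysis is a list of URLs that answer bots with 3xx or 404 codes, weighted by how often they are requested. The Python sketch below builds that list for user agents containing `Googlebot`, assuming the Combined Log Format; the `redirect_report` helper and sample lines are hypothetical. Standard access-log formats do not record the `Location` header, so the log tells you which URLs redirect but not where they point; checking targets and chains requires requesting the URLs or using a crawler.

```python
import re
from collections import Counter

# Assumed NCSA Combined Log Format.
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

REDIRECTS = {"301", "302", "307", "308"}

def redirect_report(lines, agent_token="Googlebot"):
    """Count bot hits per (status, path) for redirect responses and 404s."""
    report = Counter()
    for line in lines:
        m = COMBINED.match(line)
        if not m or agent_token not in m["agent"]:
            continue
        if m["status"] in REDIRECTS or m["status"] == "404":
            report[(m["status"], m["path"])] += 1
    return report

GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
lines = [
    f'66.249.66.1 - - [10/Oct/2023:10:00:00 +0000] "GET /old HTTP/1.1" 301 0 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2023:11:00:00 +0000] "GET /old HTTP/1.1" 301 0 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2023:11:05:00 +0000] "GET /promo HTTP/1.1" 302 0 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2023:11:10:00 +0000] "GET /gone HTTP/1.1" 404 0 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2023:11:15:00 +0000] "GET /new HTTP/1.1" 200 900 "-" "{GOOGLEBOT}"',
]

report = redirect_report(lines)
for (status, path), hits in report.most_common():
    print(status, path, hits)
```

Frequently requested 302 or 307 URLs are worth a second look, since a long-lived move is usually better served by a permanent redirect.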

Finding and fixing redirect issues is essential for maintaining a healthy website and optimizing your SEO efforts. Log file analysis allows you to identify redirect issues, analyze redirect patterns, fix redirect errors, monitor redirect effectiveness, and utilize redirect best practices. By taking proactive measures to address redirect issues, you can improve user experience, preserve link equity, and ensure that search engines can effectively crawl and index your website.

7. Identifying and Resolving Server Errors

– Identifying server errors: Log file analysis helps in identifying server errors that may occur when search engine bots try to access your website. Server errors, such as 500 Internal Server Error or 503 Service Unavailable, can negatively impact your website’s visibility and accessibility. By examining the log files, you can identify the URLs that return server errors and determine the frequency and severity of these errors.

– Resolving server errors: Once server errors have been identified, it is important to resolve them promptly to ensure that search engine bots can access and index your website properly. Common solutions for server errors include checking server configurations, fixing coding issues, and optimizing website infrastructure. By addressing server errors, you can improve your website’s performance, user experience, and search engine rankings.

– Monitoring server error patterns: Log file analysis allows you to monitor server error patterns over time. By analyzing the log files, you can identify any recurring server errors and investigate the root causes. This information helps in identifying and addressing underlying issues that may be affecting your website’s performance and visibility.

– Preventing future server errors: Log file analysis provides insights into potential areas for improvement to prevent future server errors. By examining the log files, you can identify any issues with server response times, server configurations, or website infrastructure that may lead to server errors. Taking proactive measures to address these issues can help prevent server errors and ensure smooth website operation.
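Recurring server errors often cluster in time, for example during scheduled jobs or traffic peaks. This Python sketch, assuming the Combined Log Format, counts 5xx responses per hour and URL across all clients, since such errors affect visitors and bots alike; the `server_errors_by_hour` helper and sample lines are invented for illustration.

```python
import re
from collections import Counter
from datetime import datetime

# Assumed NCSA Combined Log Format.
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

def server_errors_by_hour(lines):
    """Count 5xx responses per (hour, path) to reveal recurring failure windows."""
    errors = Counter()
    for line in lines:
        m = COMBINED.match(line)
        if m and m["status"].startswith("5"):
            stamp = datetime.strptime(m["time"], "%d/%b/%Y:%H:%M:%S %z")
            errors[(stamp.strftime("%Y-%m-%d %H:00"), m["path"])] += 1
    return errors

GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
lines = [
    f'66.249.66.1 - - [10/Oct/2023:02:05:10 +0000] "GET /search HTTP/1.1" 503 0 "-" "{GOOGLEBOT}"',
    '203.0.113.5 - - [10/Oct/2023:02:40:31 +0000] "GET /search HTTP/1.1" 503 0 "-" "Mozilla/5.0 (Windows NT 10.0)"',
    '203.0.113.9 - - [10/Oct/2023:14:12:00 +0000] "POST /checkout HTTP/1.1" 500 0 "-" "Mozilla/5.0 (Macintosh)"',
    f'66.249.66.1 - - [10/Oct/2023:02:10:00 +0000] "GET / HTTP/1.1" 200 512 "-" "{GOOGLEBOT}"',
]

errors = server_errors_by_hour(lines)
for (hour, path), hits in errors.most_common():
    print(hour, path, hits)
```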

Resolving server errors is crucial for maintaining a healthy website and ensuring optimal search engine performance. By using log file analysis to identify and resolve server errors, businesses can improve their website’s accessibility, enhance user experience, and boost search engine rankings. Regular monitoring and proactive measures can help prevent server errors and ensure the smooth operation of your website.

8. Analyzing User Agent Data

Analyzing user agent data is a crucial step in log file analysis for SEO and online marketing. By examining the user agent information recorded in the log files, you can gain valuable insights into the devices, browsers, and operating systems used by your website visitors. Here are some key reasons why analyzing user agent data is important:

– Optimizing for different devices: User agent data allows you to understand the devices your visitors are using to access your website. This information helps you optimize your website’s design and functionality for different devices, such as desktops, mobile phones, and tablets. By providing a seamless user experience across devices, you can improve engagement and conversions.

– Testing website compatibility: User agent data helps you identify the browsers and operating systems that your visitors are using. This information is valuable for testing your website’s compatibility across different browser versions and operating systems. By ensuring that your website is compatible with popular browsers and operating systems, you can prevent any potential issues that may arise and provide a smooth browsing experience for your users.

– Understanding user behavior: Combined with data from an analytics tool, user agent data can provide insights into how users interact with your website based on their devices and browsers. Server logs do not record bounce rates or session durations themselves, but by comparing device and browser segments in your analytics platform, you can identify whether certain devices or browsers have higher bounce rates or longer session durations. This information can help you make data-driven decisions to improve user experience and optimize your website’s conversion funnel.

– Targeting specific user segments: Analyzing user agent data allows you to identify specific user segments based on their devices and browsers. For instance, if you notice a significant portion of your traffic comes from mobile users, you can prioritize mobile optimization and tailor your content or marketing campaigns to cater to this specific segment.

– Monitoring emerging trends: User agent data can provide insights into emerging trends in device usage and browser preferences among your website visitors. By staying updated on these trends, you can adapt your online marketing strategies accordingly and ensure your website is optimized for the latest technologies and user preferences.
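A first pass at segmenting traffic can be done with simple substring rules. The Python sketch below buckets user-agent strings into bot, mobile, or desktop. The keyword lists and the `classify_agent` helper are a deliberately crude assumption (tablets, for instance, are not handled consistently), so a maintained user-agent parsing library is a better fit for production reporting.

```python
from collections import Counter

def classify_agent(agent):
    """Very rough bucket for a user-agent string: 'bot', 'mobile', or 'desktop'."""
    ua = agent.lower()
    # Check bots first: Googlebot's smartphone crawler also contains "Android" and "Mobile".
    if any(token in ua for token in ("bot", "crawler", "spider")):
        return "bot"
    if any(token in ua for token in ("mobile", "android", "iphone")):
        return "mobile"
    return "desktop"

agents = [
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (Linux; Android 10; K) AppleWebKit/537.36 (KHTML, like Gecko) "
    "Chrome/120.0.0.0 Mobile Safari/537.36",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) "
    "Chrome/120.0.0.0 Safari/537.36",
]

segments = Counter(classify_agent(a) for a in agents)
print(dict(segments))
```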

Analyzing user agent data is crucial for optimizing your website’s performance, user experience, and online marketing efforts. By understanding the devices, browsers, and operating systems used by your visitors, you can tailor your website to meet their needs, improve compatibility, and stay ahead of emerging trends. By leveraging the insights gained from user agent analysis, you can enhance user satisfaction, increase engagement, and drive better results for your SEO and online marketing campaigns.

Common Challenges and Solutions

– Dealing with Large Log Files: One common challenge in log file analysis is handling large log files, which can be difficult to process and analyze. To overcome this challenge, you can use log file analysis tools that are specifically designed to handle large volumes of data. These tools can help in efficiently parsing and analyzing log files, making the process more manageable. Additionally, compressing archived log files (for example with gzip) reduces the storage they take up, and many tools can read compressed files directly.

– Understanding Log File Formats: Log file formats vary with the web server (such as Apache, Nginx, or IIS) and its configuration. Each format has its own specific syntax and structure, which can pose a challenge when trying to analyze the data. To overcome this challenge, it is important to familiarize yourself with the log file format you are working with. Understanding the format will help you correctly interpret and extract the necessary data from the log files.

– Interpreting Log File Data: Another challenge in log file analysis is interpreting the data and extracting meaningful insights. Log files contain a wealth of information, but it can be overwhelming to analyze without a clear understanding of what to look for. To overcome this challenge, it is important to define specific metrics and goals before analyzing the log files. This will help you focus on the key data points that align with your SEO and marketing objectives.

– Visualizing Log File Data: Log files often contain large amounts of raw data, which can be difficult to interpret and present in a meaningful way. To overcome this challenge, you can use data visualization tools to create charts, graphs, and reports that make it easier to understand the patterns and trends in the log file data. Visual representations of the data can help in identifying issues, tracking progress, and communicating insights to stakeholders.

– Ensuring Data Accuracy: Log files can sometimes contain inaccurate or incomplete data, for example when logging was interrupted, when lines are truncated, or when requests are answered by a CDN cache and never reach the origin server's logs. To ensure data accuracy, regularly check the log files for malformed lines, gaps in time coverage, and other inconsistencies. Cross-referencing log data with other analytics tools, such as Google Analytics, can also help, but expect the numbers to differ: JavaScript-based analytics does not record most bot traffic or visits where the tracking tag fails to load, so look for large, unexplained discrepancies rather than an exact match.
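As a rough illustration of the validation step, the Python sketch below counts lines that do not match the expected layout and flags long gaps between consecutive timestamps, which may indicate missing data. It assumes Common or Combined Log Format lines with Apache-style timestamps; the function name validate_log and the one-hour gap threshold are illustrative choices, not a standard.

```python
import re
from datetime import datetime, timedelta

# Matches the start of a Common/Combined Log Format line: the bracketed
# timestamp (group 1), the status code, and the byte count.
LINE_RE = re.compile(r'^\S+ \S+ \S+ \[([^\]]+)\] "[^"]*" (\d{3}) (\d+|-)')
TIME_FMT = "%d/%b/%Y:%H:%M:%S %z"  # e.g. 10/Oct/2023:13:55:36 +0000


def validate_log(lines, max_gap=timedelta(hours=1)):
    """Hypothetical helper: count good/malformed lines and report long time gaps."""
    good = malformed = 0
    gaps = []
    previous = None
    for line in lines:
        match = LINE_RE.match(line)
        if match is None:
            malformed += 1
            continue
        try:
            ts = datetime.strptime(match.group(1), TIME_FMT)
        except ValueError:
            malformed += 1  # bracketed text that is not a valid timestamp
            continue
        good += 1
        # A gap longer than max_gap between consecutive entries may mean missing data.
        if previous is not None and ts - previous > max_gap:
            gaps.append((previous, ts))
        previous = ts
    return {"good": good, "malformed": malformed, "gaps": gaps}


# Usage (hypothetical file name):
# with open("access.log", encoding="utf-8", errors="replace") as f:
#     print(validate_log(f))
```

A sudden rise in malformed lines or an unexpected gap is a prompt to check the logging configuration before trusting any conclusions drawn from that period.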

Log file analysis comes with its own set of challenges, including handling large log files, understanding different log file formats, interpreting the data, visualizing the data, and ensuring data accuracy. However, by using appropriate tools, familiarizing yourself with the log file format, defining specific metrics, utilizing data visualization techniques, and validating the data, you can overcome these challenges and effectively analyze log files for SEO and online marketing success.

1. Dealing with Large Log Files

When dealing with large log files, it’s important to have a strategy in place to effectively analyze and extract valuable insights. Here are some tips for dealing with large log files:

– Use log file analysis tools: Utilize tools that are designed to handle large volumes of data. They can help you process and analyze log files more efficiently, saving you time and effort. Popular options include Splunk, the ELK Stack (Elasticsearch, Logstash, and Kibana), and Google BigQuery, a cloud data warehouse that can query log data at scale.

– Filter and segment data: Instead of analyzing the entire log file, focus on the time periods or sections that are relevant to your analysis. This reduces the amount of data you need to process and makes it more manageable. You can use filters to extract specific information, such as URLs, user agents, or response codes, that matters for your analysis.

– Aggregate data: Rather than analyzing individual log entries, consider aggregating the data to identify patterns and trends. This can help you gain a broader understanding of your website’s performance and user behavior. Aggregation can be done by grouping data based on specific criteria, such as IP addresses, user agents, or URLs.

– Optimize storage and processing: When working with large log files, ensure that you have enough storage space and processing power to handle the data. Consider using cloud-based storage solutions or distributed computing platforms to efficiently manage and process the log files. This can help speed up the analysis process and avoid any limitations imposed by local hardware.

– Consider sampling: If the log file is too large to analyze in its entirety, you can consider taking a representative sample of the data. Sampling involves selecting a subset of log entries that accurately represent the overall log file. This can help you derive insights from a smaller dataset while still maintaining a reasonable level of accuracy.
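The filtering and aggregation tips can be combined in a few lines of Python. The sketch below streams a plain or gzip-compressed access log line by line, so memory use stays flat regardless of file size, keeps only requests whose user agent contains a given token, and counts hits per URL path. It assumes the Combined Log Format; the helper names open_log and count_paths and the default "Googlebot" token are illustrative. Because user-agent strings can be spoofed, a substring match alone does not prove a request came from Google.

```python
import gzip
from collections import Counter
import re

# Combined Log Format: host ident user [time] "METHOD path PROTOCOL" status bytes "referer" "agent"
COMBINED_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-) "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def open_log(path):
    """Hypothetical helper: open a plain or .gz log as text for line-by-line reading."""
    if path.endswith(".gz"):
        return gzip.open(path, "rt", encoding="utf-8", errors="replace")
    return open(path, "r", encoding="utf-8", errors="replace")


def count_paths(lines, agent_substring="Googlebot", status=None):
    """Filter by user-agent substring (and optional status code) and count hits per path."""
    counts = Counter()
    for line in lines:
        m = COMBINED_RE.match(line)
        if m is None or agent_substring not in m["agent"]:
            continue  # unparseable line or a different user agent
        if status is not None and m["status"] != status:
            continue
        counts[m["path"]] += 1
    return counts


# Usage (hypothetical file name):
# with open_log("access.log.gz") as f:
#     print(count_paths(f).most_common(20))
```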

Dealing with large log files can be challenging, but with the right approach and tools, it is possible to extract valuable insights efficiently. By implementing these strategies, you can effectively analyze large log files and uncover important information that can contribute to your SEO and online marketing success.
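For the sampling tip, one common technique when the total number of lines is not known in advance is reservoir sampling (Algorithm R), which keeps a uniform random sample of k lines in a single pass. The sketch below is a minimal Python version; the function name and the optional seed parameter are illustrative. Keep in mind that a uniform sample of all lines can under-represent rare events such as occasional bot visits, so filtering first and sampling second is often the safer order.

```python
import random


def reservoir_sample(lines, k, seed=None):
    """Keep a uniform random sample of k items from a stream of unknown length."""
    rng = random.Random(seed)  # seeded generator makes runs reproducible
    sample = []
    for i, line in enumerate(lines):
        if i < k:
            sample.append(line)  # fill the reservoir with the first k lines
        else:
            j = rng.randint(0, i)  # inclusive: 0..i
            if j < k:
                sample[j] = line  # line i survives with probability k / (i + 1)
    return sample


# Usage (hypothetical file name):
# with open("access.log", encoding="utf-8", errors="replace") as f:
#     subset = reservoir_sample(f, 10_000, seed=42)
```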

2. Understanding Log File Formats

Understanding log file formats is essential for effective log file analysis. Log files come in various formats, and each format has its own structure and information. Here are some common log file formats used in SEO and online marketing:

– Common Log Format (CLF): The Common Log Format is a basic log file format that includes fields such as the IP address of the visitor, the date and time of the request, the request line (HTTP method, requested URL, and protocol), the HTTP status code, and the number of bytes transferred. CLF is widely supported by web servers and is relatively easy to read and analyze.

– Combined Log Format: The Combined Log Format extends the Common Log Format with two additional fields: the referrer URL and the user agent string. These fields provide more comprehensive data for analyzing user behavior, identifying search engine bots, and tracking traffic sources.

– Extended Log Format (ELF), also known as the W3C Extended Log File Format: This customizable format was developed by the World Wide Web Consortium (W3C) and is the default format for Microsoft IIS. A "#Fields:" directive at the top of the file lists the fields each entry contains, such as date, time, client IP, requested URL, status code, user agent, referrer, and time taken, so website owners can capture the fields they need. Browser and operating system details have to be derived from the user agent string, and client-side details such as screen resolution are not available in server logs. Because the field list can vary from file to file, parsing ELF requires reading that directive rather than assuming a fixed layout.

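– Apache/Nginx Access Logs: Apache and Nginx are popular web servers whose access log layouts are configurable (Apache with the LogFormat directive, Nginx with log_format). Nginx uses the Combined Log Format by default, and Apache is usually configured with either the Common or the Combined format. These logs contain valuable information such as the requested URL, the status code, and the user agent. Response time is not logged by default and must be added explicitly, for example with %D (microseconds) in Apache or $request_time in Nginx. Understanding the specific log file format used by your web server is crucial for accurate analysis.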
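To make the Common and Combined formats concrete, here is a minimal Python parser that turns one line into a structured record. It assumes the standard Apache "combined" layout (which the default Nginx format also follows) and treats the referrer and user agent fields as optional so plain CLF lines parse too. The LogEntry and parse_line names are illustrative, and the regex does not handle escaped quotes inside fields, which real logs occasionally contain.

```python
import re
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

# Apache "combined": %h %l %u %t "%r" %>s %b "%{Referer}i" "%{User-agent}i"
# The trailing referer/agent pair is optional so Common Log Format lines also match.
COMBINED_RE = re.compile(
    r'^(?P<host>\S+) (?P<ident>\S+) (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\d+|-)'
    r'(?: "(?P<referer>[^"]*)" "(?P<agent>[^"]*)")?'
)


@dataclass
class LogEntry:  # hypothetical record type for illustration
    host: str
    time: datetime
    request: str
    status: int
    size: int
    referer: Optional[str]  # None for plain Common Log Format lines
    agent: Optional[str]


def parse_line(line: str) -> Optional[LogEntry]:
    """Parse one Common or Combined Log Format line; return None if it does not match."""
    m = COMBINED_RE.match(line)
    if m is None:
        return None
    return LogEntry(
        host=m["host"],
        time=datetime.strptime(m["time"], "%d/%b/%Y:%H:%M:%S %z"),
        request=m["request"],
        status=int(m["status"]),
        size=0 if m["size"] == "-" else int(m["size"]),  # "-" means no body was sent
        referer=m["referer"],
        agent=m["agent"],
    )
```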

To effectively analyze log files, it is important to understand the format in which the log files are generated. This knowledge will help you choose the right log file analysis tools and techniques and ensure accurate interpretation of the data. By familiarizing yourself with different log file formats, you can optimize your log file analysis process and gain valuable insights for improving your SEO and online marketing strategies.
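Because W3C Extended (ELF) logs declare their own columns, a parser has to read the "#Fields:" directive instead of assuming a fixed layout. The Python sketch below does that, skipping other directives and any rows whose field count does not match. It assumes fields are separated by whitespace and that no value contains a quoted space (IIS, for example, replaces spaces in the user agent with "+"); parse_w3c is an illustrative name.

```python
def parse_w3c(lines):
    """Yield one dict per entry, keyed by the names in the most recent #Fields directive."""
    fields = None
    for line in lines:
        line = line.rstrip("\r\n")
        if not line:
            continue
        if line.startswith("#"):
            if line.startswith("#Fields:"):
                fields = line[len("#Fields:"):].split()
            continue  # other directives (#Software, #Version, #Date) are skipped
        if fields is None:
            continue  # rows before any #Fields directive cannot be interpreted
        values = line.split()
        if len(values) != len(fields):
            continue  # skip malformed rows
        yield dict(zip(fields, values))


# Usage (hypothetical file name):
# with open("u_ex231010.log", encoding="utf-8", errors="replace") as f:
#     for row in parse_w3c(f):
#         print(row.get("cs-uri-stem"), row.get("sc-status"))
```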

3. Interpreting Log File Data

Interpreting log file data is a crucial step in log file analysis for SEO and online marketing. The data contained in log files provides valuable insights into the behavior of search engine bots and website visitors. Here are some key aspects to consider when interpreting log file data:

– Crawl frequency: Analyzing log files can help you determine how frequently search engine bots crawl your website. By examining the number of requests made by bots within a specific time frame, you can identify the pages that are being crawled more frequently and those that are not receiving enough attention. This information allows you to optimize your website’s structure and ensure that important pages are crawled and indexed by search engines.

– Response codes: Log files record the HTTP status code your server returned for each request, whether it came from a search engine bot or a user. These codes indicate the outcome of the request, such as 200 for success, 301 for a permanent redirect, 404 for a page not found, or 500 for a server error. By analyzing the response codes, you can find server errors (5xx), broken links (404), and redirect chains (3xx) that waste crawl activity and frustrate users. Fixing these issues improves user experience and helps search engines spend their time crawling pages that actually work.

– User agent data: Log files contain user agent information, which includes details about the devices, browsers, and operating systems used by your website visitors. Analyzing this data can help you understand your audience better and optimize your website accordingly. For example, if you notice a significant number of visitors using mobile devices, you can ensure that your website is mobile-friendly and provides a seamless experience on smaller screens.

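– Referrer data: Log files in the Combined format also record the referrer, which shows the sources driving traffic to your website. By examining the referrer data, you can identify the websites, search engines, or social media platforms that are referring visitors to your site. Note that major search engines no longer include the visitor's search query in the referrer, so this data usually tells you which search engine sent a visit but not which keywords were used. This information can still help you assess the effectiveness of your marketing campaigns and make informed decisions about where to allocate your resources.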
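The crawl frequency and response code checks above can be sketched in Python as follows: for requests whose user agent contains a bot token, the function counts hits per URL per day and tallies status codes by class (2xx, 3xx, 4xx, 5xx). It assumes Combined Log Format input; crawl_report and the default "Googlebot" token are illustrative choices, and matching on the user agent alone does not verify that a request really came from a search engine.

```python
import re
from collections import Counter, defaultdict
from datetime import datetime

# Combined Log Format, capturing only the fields this report needs.
COMBINED_RE = re.compile(
    r'^\S+ \S+ \S+ \[(?P<time>[^\]]+)\] "\S+ (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def crawl_report(lines, bot_token="Googlebot"):
    """Hypothetical helper: bot hits per path per day, and counts per status class."""
    hits_per_day = defaultdict(Counter)  # path -> Counter({date: hits})
    status_classes = Counter()           # "2xx", "3xx", "4xx", "5xx" -> hits
    for line in lines:
        m = COMBINED_RE.match(line)
        if m is None or bot_token not in m["agent"]:
            continue
        day = datetime.strptime(m["time"], "%d/%b/%Y:%H:%M:%S %z").date()
        hits_per_day[m["path"]][day] += 1
        status_classes[m["status"][0] + "xx"] += 1
    return hits_per_day, status_classes
```

Paths with few or no bot hits over a long period, and a growing share of 4xx or 5xx responses, are the signals the bullets above describe.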

Interpreting log file data requires careful analysis and understanding of the different metrics and indicators present in the files. By gaining insights into crawl frequency, response codes, user agent data, and referrer data, you can make informed decisions to optimize your website’s performance, improve user experience, and enhance your overall SEO and online marketing strategies.
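For the user agent and referrer data, a rough Python summary might look like the sketch below. It skips requests whose user agent contains common bot keywords, labels the rest "mobile" or "desktop/other" using the "Mobi" token (a heuristic MDN suggests for mobile detection, not a reliable device database), and counts referring hostnames. The keyword list, function name, and labels are illustrative assumptions, and Combined Log Format input is assumed.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Finds the request, status, bytes, referer, and agent at the end of a Combined Log Format line.
AGENT_REF_RE = re.compile(r'"[^"]*" \d{3} \S+ "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"\s*$')


def audience_summary(lines, bot_tokens=("bot", "crawler", "spider")):
    """Hypothetical helper: referring hosts and a rough mobile/desktop split for non-bot hits."""
    referrers = Counter()
    devices = Counter()
    for line in lines:
        m = AGENT_REF_RE.search(line)
        if m is None:
            continue
        agent = m["agent"]
        if any(token in agent.lower() for token in bot_tokens):
            continue  # crude bot exclusion by user-agent keyword
        devices["mobile" if "Mobi" in agent else "desktop/other"] += 1
        host = urlsplit(m["referer"]).hostname  # None for "-" or empty referrers
        referrers[host or "(direct/none)"] += 1
    return referrers, devices
```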

Conclusion

– Log file analysis is a critical component of SEO and online marketing success. By examining log files, businesses can gain valuable insights into website performance, search engine crawling and indexing patterns, technical issues, keyword targeting, and user behavior. This information can be used to optimize websites, improve search engine rankings, and enhance the overall user experience.

– Understanding search engine crawling and indexing is crucial for website optimization. Log file analysis provides insights into how search engine bots crawl and index websites, allowing businesses to optimize their website structure, ensure easy access to content, and improve visibility in search engine results.

– Identifying and resolving technical issues is essential for maintaining a high-performing website. Log file analysis helps in uncovering crawl errors, server errors, and redirect issues that may negatively impact SEO efforts. By promptly addressing these issues, businesses can improve website visibility and user experience.

– Optimizing crawl budget can maximize the efficiency of search engine crawling. Log file analysis allows businesses to identify pages that receive excessive crawling and those that are not being crawled frequently. By optimizing the crawl budget, businesses can prioritize the crawling of important pages and reduce unnecessary crawling on low-value pages.

– Keyword targeting plays a vital role in attracting relevant traffic to a website. Server logs rarely reveal the search queries themselves, because major search engines no longer pass the query in the referrer, but they do show which landing pages receive visits from search engines. Combined with query data from a tool such as Google Search Console, this helps businesses optimize content, target relevant keywords, improve visibility in search engine results, and attract more qualified traffic.

– Enhancing user experience is essential for retaining and attracting website visitors. Log file analysis helps in understanding user behavior by analyzing user agent data. This information can be used to optimize websites for different user agents, ensuring a seamless user experience across various platforms.

In conclusion, log file analysis is a powerful tool for SEO and online marketing success. It provides valuable insights into website performance, search engine crawling and indexing, technical issues, keyword targeting, and user behavior. By leveraging the information obtained from log file analysis, businesses can make informed decisions, optimize their websites, and achieve better visibility and rankings in search engine results. To learn more about other SEO techniques, check out our article on how to download a sitemap.

Frequently Asked Questions

1. What are log files and why are they important for SEO?

Log files are records of server activity that track the interactions between a website and its visitors. They are important for SEO because they provide valuable data on how search engine bots crawl and index the site, identify technical issues that may affect its performance, and offer insights into user behavior.

2. How can log file analysis improve website crawling and indexing?

Log file analysis helps identify patterns in search engine crawling and indexing, allowing website owners to optimize their site’s structure and ensure that search engines can easily access and index their content. By understanding which pages are frequently crawled and how often search engines visit the site, businesses can make informed decisions to improve their website’s visibility and performance.

3. Can log file analysis help identify and fix technical issues?

Yes, log file analysis is a powerful tool for identifying and resolving technical issues. By examining the log files, businesses can uncover crawl errors, server errors, and redirect issues that may impact their SEO efforts. Fixing these issues promptly can improve the website’s visibility and user experience.

4. How can log file analysis optimize crawl budget?

Log file analysis allows businesses to understand how search engine bots allocate crawl budget to their website. By analyzing the log files, website owners can identify pages that receive excessive crawling and those that are not being crawled frequently. This information helps optimize the crawl budget by prioritizing the crawling of important pages and reducing unnecessary crawling on low-value pages.
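As a rough illustration of how crawl budget distribution can be measured, the Python sketch below takes request paths already filtered to search engine bot hits and reports each top-level section's share of those requests, plus the share spent on URLs with query parameters, which are often low-value duplicates. Grouping by the first path segment and the function name are illustrative assumptions; many sites will want a grouping that matches their own URL structure.

```python
from collections import Counter
from urllib.parse import urlsplit


def crawl_budget_share(paths):
    """Hypothetical helper: share of bot requests per top-level section and for parameterized URLs."""
    sections = Counter()
    with_params = 0
    total = 0
    for path in paths:
        parts = urlsplit(path)
        segments = [s for s in parts.path.split("/") if s]
        # Group "/blog/post-1" under "/blog/"; the homepage stays "/".
        sections["/" + segments[0] + "/" if segments else "/"] += 1
        if parts.query:
            with_params += 1  # e.g. ?sort=price or ?page=2
        total += 1
    if total == 0:
        return {}, 0.0
    shares = {section: n / total for section, n in sections.most_common()}
    return shares, with_params / total
```

A section that takes a large share of bot requests while holding few important pages, or a high share of parameterized URLs, is a candidate for the crawl budget optimizations described above.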

5. What role does log file analysis play in keyword targeting?

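Log files themselves rarely contain the search queries users typed, because major search engines now strip the query from the referrer; a log entry usually shows only which search engine sent the visit. What log files can show is which landing pages receive organic search visits and how often search engine bots crawl them. Pairing that with query data from a tool such as Google Search Console lets businesses see which keywords drive traffic to those pages, optimize their content, and target relevant keywords. This can improve the website's visibility in search engine results pages (SERPs) and attract more qualified traffic.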

6. How does log file analysis enhance user experience?

Log file analysis helps in understanding user behavior on a website. By examining the log files, businesses can analyze user agent data, including the devices, browsers, and operating systems used by visitors. This information can help optimize the website for different user agents, ensuring a seamless user experience across various platforms.

7. What challenges can arise when analyzing log files?

Challenges when analyzing log files include dealing with large log files, understanding different log file formats, and interpreting the data accurately. These challenges can be overcome with the right tools, knowledge, and expertise in log file analysis.

8. Are there any specific tools for log file analysis?

Yes, there are several tools available for log file analysis, such as Screaming Frog Log File Analyser, Logz.io, and the ELK Stack. These tools provide advanced features to analyze log files effectively and derive actionable insights.

9. How often should log file analysis be performed?

The frequency of log file analysis largely depends on the size and complexity of the website, as well as the level of SEO and online marketing activities. Generally, it is recommended to perform log file analysis on a regular basis, such as monthly or quarterly, to stay updated with the website’s performance and make necessary optimizations.

10. Can log file analysis benefit small businesses as well?

Absolutely! Log file analysis is beneficial for businesses of all sizes, including small businesses. It helps in identifying and fixing technical issues, optimizing crawl budget, improving keyword targeting, and enhancing user experience. By leveraging log file analysis, small businesses can improve their online presence, attract more organic traffic, and achieve better SEO results.